Broadcom's AI Infrastructure and XPU Business: Driving a Trillion-Dollar Valuation

How strategic partnerships with Google on TPUs and with others on XPUs, coupled with networking, are counterbalancing NVIDIA's rise

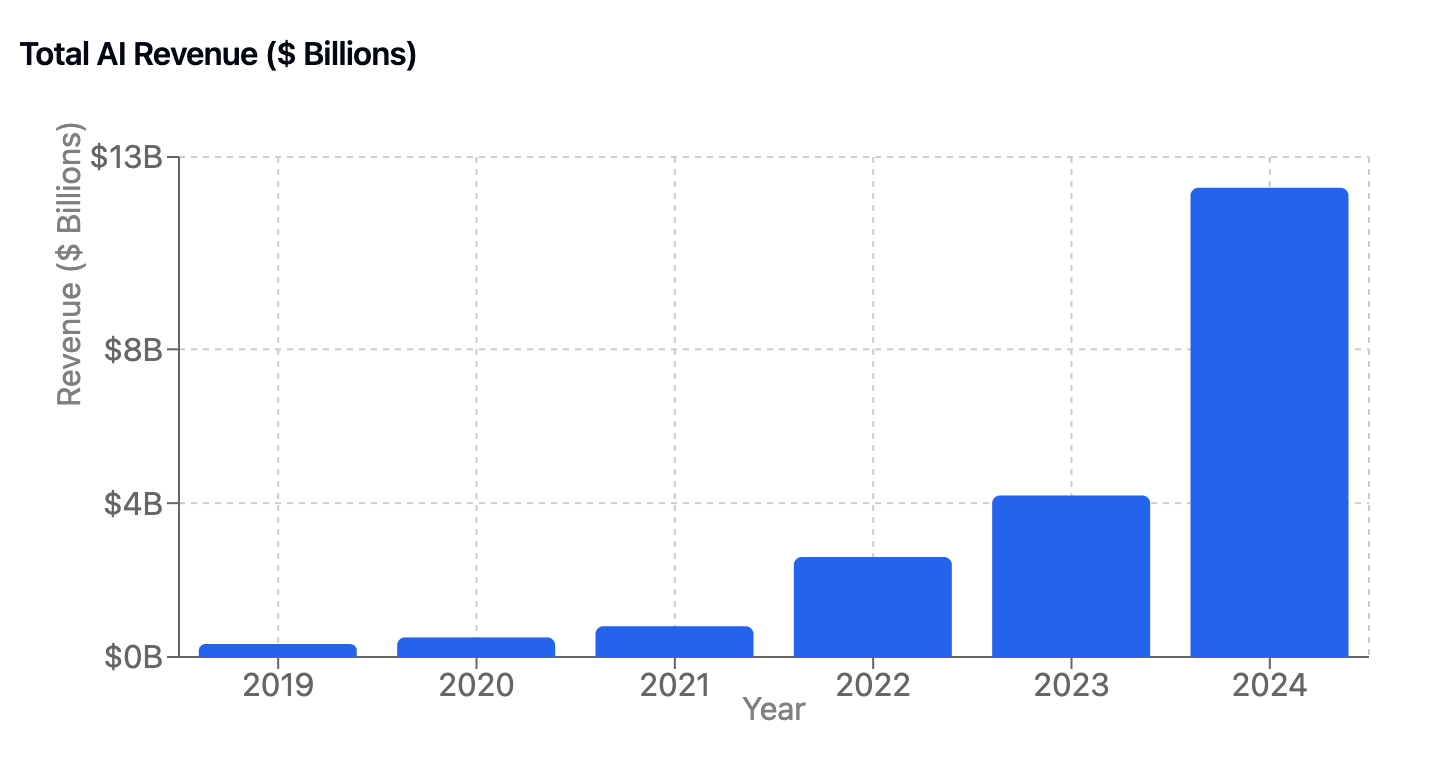

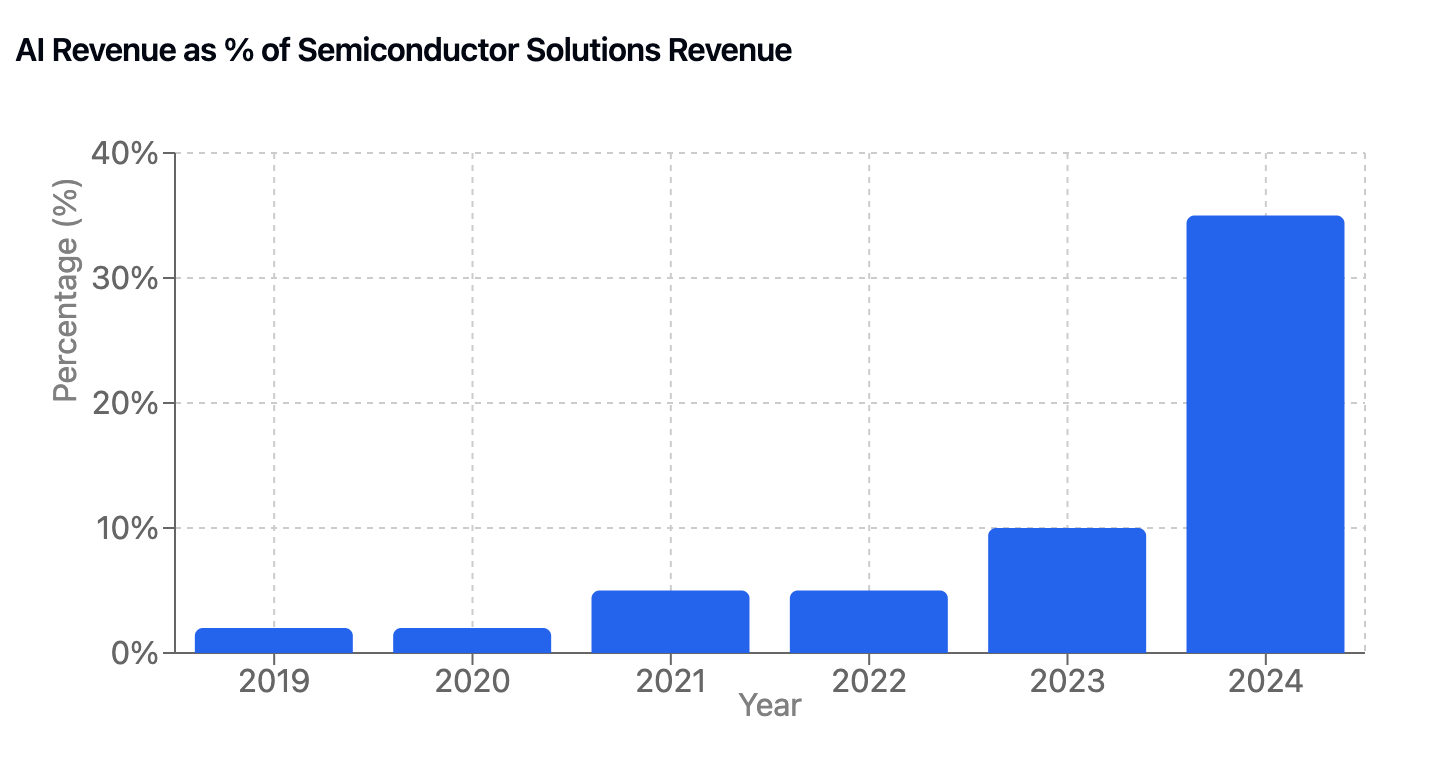

In early 2024, we highlighted how hyperscaler custom silicon initiatives would likely become the biggest rival to NVIDIA. When we broke down the supplier partners of Amazon, Microsoft, Google, and Meta, one vendor was universal: Broadcom. These hyperscalers' long-term investments in custom chips are not only narrowing NVIDIA's lead but have also become a substantial revenue stream for Broadcom. The company's AI-related revenue surged to $12.2B in fiscal year 2024, representing over 200% growth. This performance drove a 24% increase in Broadcom's stock price and pushed its market capitalization above $1 trillion, making it the 9th technology company to reach this milestone, following Apple, NVIDIA, Microsoft, Google, Amazon, Meta, Tesla, and TSMC.
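To put the growth figure in context, here is a minimal back-of-the-envelope sketch in Python that works backward from the two numbers quoted above ($12.2B and "over 200%"). The function name `implied_prior_revenue` is our own, introduced only for illustration. Because "over 200%" is a lower bound on growth, the result is an upper bound on the prior year's AI revenue, not a reported figure.

```python
def implied_prior_revenue(current_b: float, growth_pct: float) -> float:
    """Return the prior-period revenue implied by a current figure and a growth rate.

    growth_pct is the year-over-year increase in percent, so 200 means the
    current figure is three times the prior one.
    """
    if growth_pct <= -100:
        raise ValueError("growth_pct must be greater than -100")
    return current_b / (1 + growth_pct / 100)


# Figures from the article: $12.2B of AI revenue after "over 200%" growth.
# Since 200% is a lower bound on growth, this is an upper bound on the base.
upper_bound_b = implied_prior_revenue(12.2, 200)
print(f"Implied prior-year AI revenue: at most ${upper_bound_b:.2f}B")
```

In other words, growth of more than 200% means fiscal 2024 AI revenue was more than triple the prior year's, which puts the prior-year base at roughly $4B or less.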

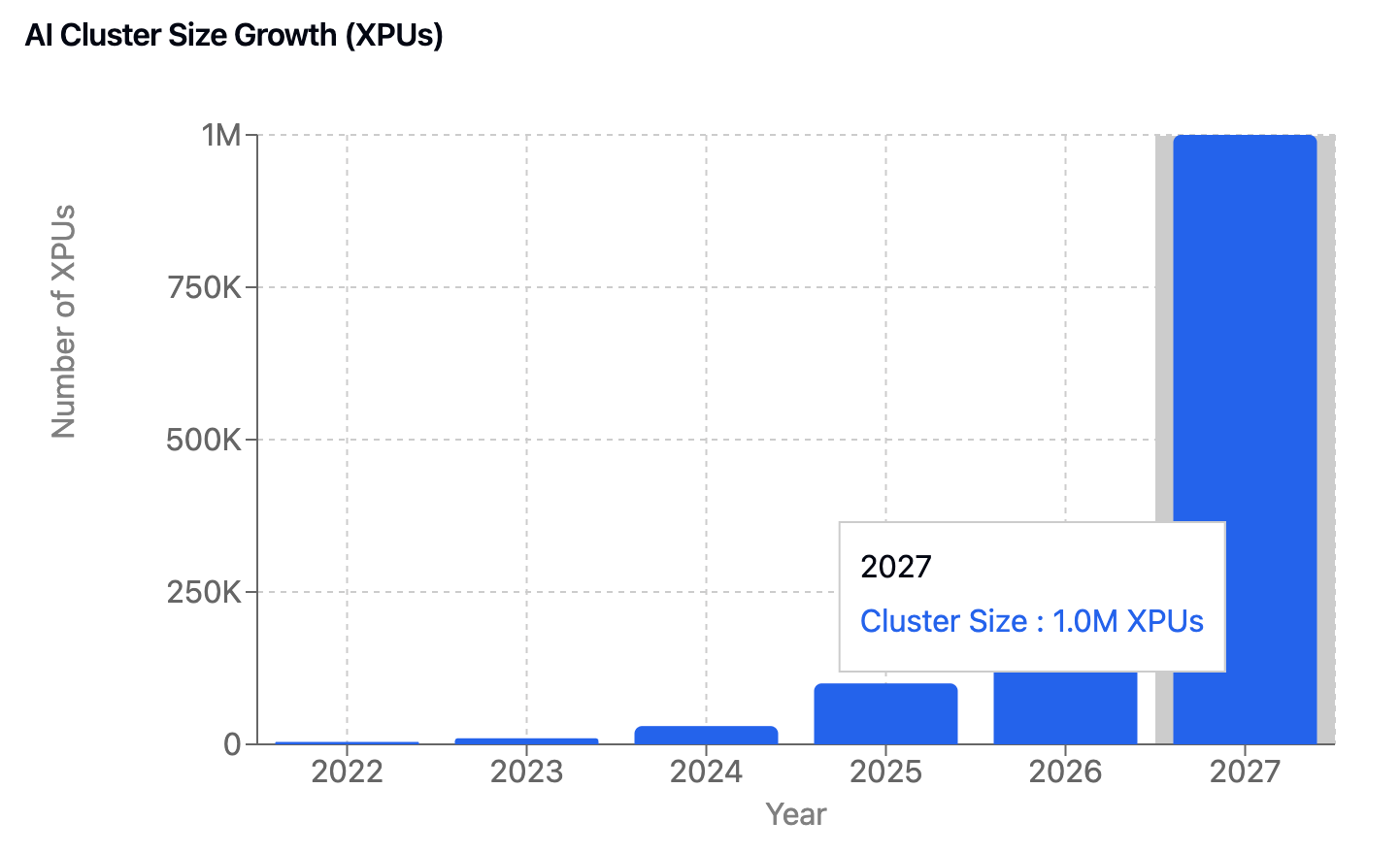

Scaling to 1M XPU Hyperscaler Clusters

Broadcom's ascent in AI infrastructure wasn't sudden; it reflects a decade-long strategic bet on custom silicon, a market in which Broadcom has beaten rival Marvell. While other semiconductor companies focused on general-purpose AI chips, Broadcom quietly built deep partnerships with hyperscalers, particularly Google, for which it has delivered ten generations of custom AI accelerators (TPUs) since 2014. This long-term collaboration has given Broadcom unique insight into hyperscale AI infrastructure needs and the ability to optimize across the entire stack, from custom accelerators to networking, interconnects, and optics.

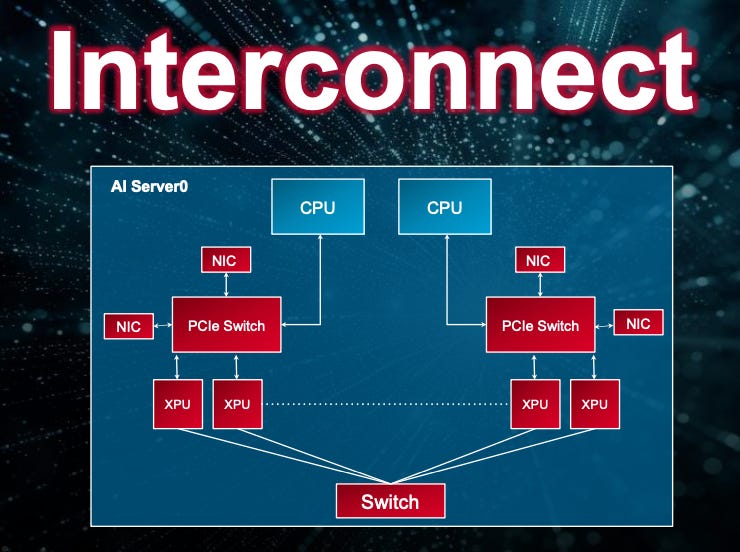

What sets Broadcom apart is its unique position as both a networking powerhouse and a custom silicon leader. With over $3B in annual R&D investment on custom AI silicon alone and over $10B of total R&D, it has built an end-to-end semiconductor portfolio that spans from custom AI accelerators to the complex fabric that connects them. Its offerings aren't just individual products but a complete infrastructure solution: custom XPUs that integrate with its industry-leading Tomahawk and Jericho3-AI switches, Thor2 NICs for optimized networking, advanced PCIe solutions for chip-to-chip communication, and next-generation optical interconnects. The numbers tell the story: its PCIe solutions offer 40% greater reach than competitors', its co-packaged optics reduce power consumption by 70%, and its networking stack outperforms traditional HPC interconnects like InfiniBand by over 10% in job completion time.

This comprehensive approach has made Broadcom the go-to partner for hyperscalers building next-generation AI infrastructure. From Google's custom TPU program to Meta's AI accelerators, Broadcom's ability to optimize across the entire stack has proven crucial for achieving the performance, power efficiency, and scale these customers demand. Its multi-generational partnerships now extend well into 2026 and beyond, showing the long-term strategic value it provides.

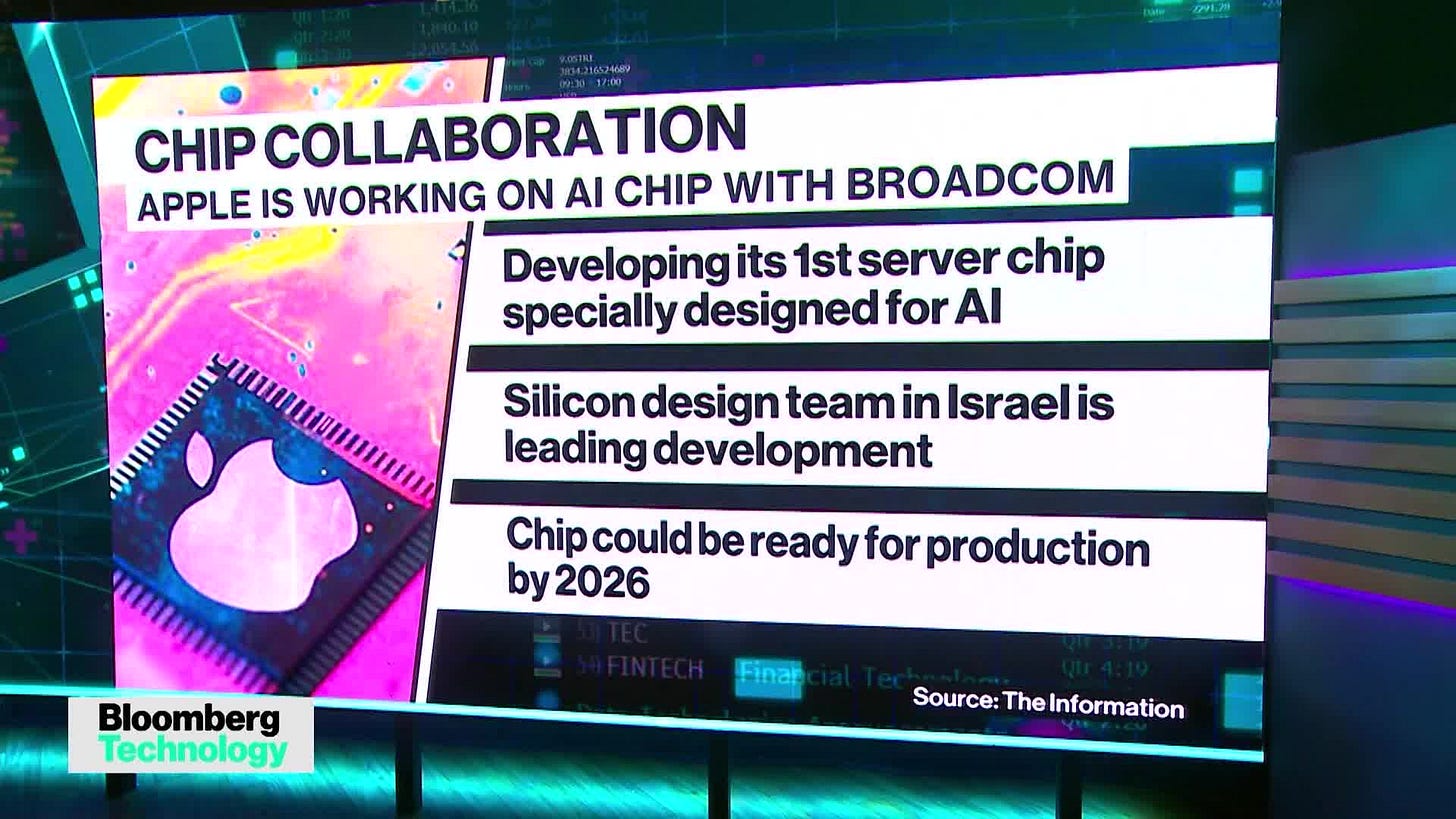

Broadcom’s latest win: Apple

In a significant move that underscores Broadcom's critical role in AI infrastructure, Apple has partnered with Broadcom to develop its first AI server chip, code-named Baltra. The chip, slated for mass production in 2026, aims to power Apple's growing AI ambitions, particularly its new "Apple Intelligence" features that generate and proofread text, create images, and summarize content across billions of iOS devices. While Apple's current Mac processors can handle basic AI tasks, they aren't optimized for the intense computing demands of more complex AI features, creating a bottleneck as Apple scales its AI services.

Unlike competitors that rely on NVIDIA's chips, Apple is taking a characteristically vertical approach, designing its own AI server chips with Broadcom's networking technology. The partnership focuses on one of the most crucial challenges in AI computing: efficiently linking multiple chips together to process the massive amounts of data required for inference workloads. This collaboration reflects a broader industry trend in which tech giants are increasingly partnering with Broadcom to build custom AI infrastructure that offers better performance, control, and cost efficiency than off-the-shelf solutions.

Conclusion

Broadcom's rise to a trillion-dollar valuation reflects a pivotal shift in AI infrastructure. While NVIDIA dominates general-purpose AI chips, Broadcom has emerged as the crucial partner enabling hyperscalers to build custom silicon solutions at massive scale. With $12.2B in AI revenue growing over 200%, deep partnerships from Google to Apple, and technology that spans from custom accelerators to networking, Broadcom has transformed from a networking specialist into an essential architect of next-generation AI infrastructure. As the industry pushes toward million-chip clusters, Broadcom's comprehensive approach positions it to remain at the forefront of AI's next phase of growth.