OpenAI and NVIDIA’s 10 GW, $100B Bet: Power, Partners, and Breaking Free from Microsoft

Why Nvidia, OpenAI, and Oracle need each other more than Microsoft—and how AI’s future is being built in megawatts, not models

OpenAI isn’t just buying GPUs—it’s trying to assemble ~10 GW of Nvidia-backed compute capacity. That’s roughly ten nuclear reactors’ worth of power, all aimed at running AI. At this point, the real scarce resource isn’t models or algorithms, it’s megawatts.

Side note: Greg Brockman seems to have adopted Jensen Huang’s signature Tom Ford leather jacket look for the announcement. Jensen reportedly owns over 50 of these jackets and wears them almost exclusively at public events. The look has become a brand symbol, so much so that analysts often call NVIDIA launches “the leather jacket show.” Jensen seems to appreciate strong American engineering and, I have to admit, it’s a sharp look!

Scale check

10 GW ≈ 4–5M GPUs (depending on racks and efficiency). That’s effectively Nvidia’s entire 2025 production and nearly double last year’s shipments.

When Jensen Huang called the deal “monumental,” it wasn’t marketing—it was literally about the industrial scale of the project.
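The 10 GW ≈ 4–5M GPUs figure above implies an all-in power budget per GPU. A minimal Python sketch of that arithmetic follows; it assumes the 10 GW covers everything on site (cooling, networking, power conversion), which the article does not break out, and the function name is illustrative.

```python
# Back-of-envelope: facility power per GPU implied by the article's figures.
TOTAL_POWER_W = 10e9                  # 10 GW, from the article
GPU_COUNTS = (4_000_000, 5_000_000)   # 4-5M GPUs, from the article


def watts_per_gpu(total_power_w: float, gpu_count: int) -> float:
    """Average site power per GPU, overhead included (assumption: 10 GW is all-in)."""
    return total_power_w / gpu_count


for count in GPU_COUNTS:
    kw = watts_per_gpu(TOTAL_POWER_W, count) / 1e3
    print(f"{count / 1e6:.0f}M GPUs -> {kw:.1f} kW per GPU")
```

That works out to roughly 2.0 to 2.5 kW per GPU once all site overhead is included, which is why the power envelope, not the chip count, sets the ceiling.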

Financing geometry

Nvidia invests $10B in equity for every 1 GW OpenAI deploys, up to $100B at 10 GW.

For every ~$35B of GPU orders, Nvidia chips in ~$10B in exchange for OpenAI equity. Net effect: OpenAI covers ~71% of each order with cash and ~29% with equity issued to Nvidia.

In plain terms, Nvidia is partially financing its own sales—a structure that ensures demand while tying OpenAI closer to its roadmap.
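The split above is simple arithmetic. Here is a minimal Python sketch of it, assuming the ~$35B of orders per ~$10B invested holds for each 1 GW tranche. The article pairs the two figures but does not state that explicitly. Function and field names are illustrative.

```python
# Funding split implied by the article's figures (all amounts in $B).
EQUITY_PER_GW_B = 10.0   # Nvidia investment per GW deployed (from the article)
ORDERS_PER_GW_B = 35.0   # GPU orders per tranche (assumed to map 1:1 to each GW)
MAX_GW = 10              # cap: $100B at 10 GW (from the article)


def funding_split(orders_b: float, equity_b: float) -> tuple[float, float]:
    """Return (cash_share, equity_share) of one tranche of GPU orders."""
    equity_share = equity_b / orders_b
    return 1.0 - equity_share, equity_share


def cumulative(gw_deployed: float) -> dict[str, float]:
    """Cumulative orders and funding sources after gw_deployed GW, capped at MAX_GW."""
    gw = min(gw_deployed, MAX_GW)
    orders = gw * ORDERS_PER_GW_B
    equity = gw * EQUITY_PER_GW_B
    return {"orders_b": orders, "nvidia_equity_b": equity, "other_funding_b": orders - equity}


cash, equity = funding_split(ORDERS_PER_GW_B, EQUITY_PER_GW_B)
print(f"cash {cash:.0%}, equity {equity:.0%}")
print(cumulative(10))
```

Under that assumption, the full 10 GW implies about $350B of GPU orders: $100B funded by Nvidia's investment and $250B that OpenAI has to find elsewhere.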

Breaking from Microsoft

This scale-up also highlights a deeper shift: OpenAI loosening its dependency on Microsoft.

Microsoft reportedly pushed hard to block OpenAI’s Windsurf acquisition, worried it would threaten GitHub Copilot’s moat.

The partnership has grown tense: Microsoft wants OpenAI locked into Azure, while OpenAI wants flexibility to chase cheaper, more abundant capacity.

By cutting deals with Oracle for cloud and Nvidia for capital + silicon, OpenAI is deliberately hedging away from Microsoft’s grip.

The “enemy of my enemy” logic

Nvidia is equally motivated and pulling a Michael Corleone to seize power. The big three clouds—Amazon, Google, Microsoft—are all investing in their own silicon to escape Nvidia’s margins. So Nvidia has gone the opposite way:

Prop up challenger clouds like Oracle, CoreWeave, Nebius, Crusoe.

Seed alternative capacity pools that keep demand diversified and hyperscaler leverage in check.

In that light, Nvidia and OpenAI’s alignment is classic: the enemy of my enemy is my friend.

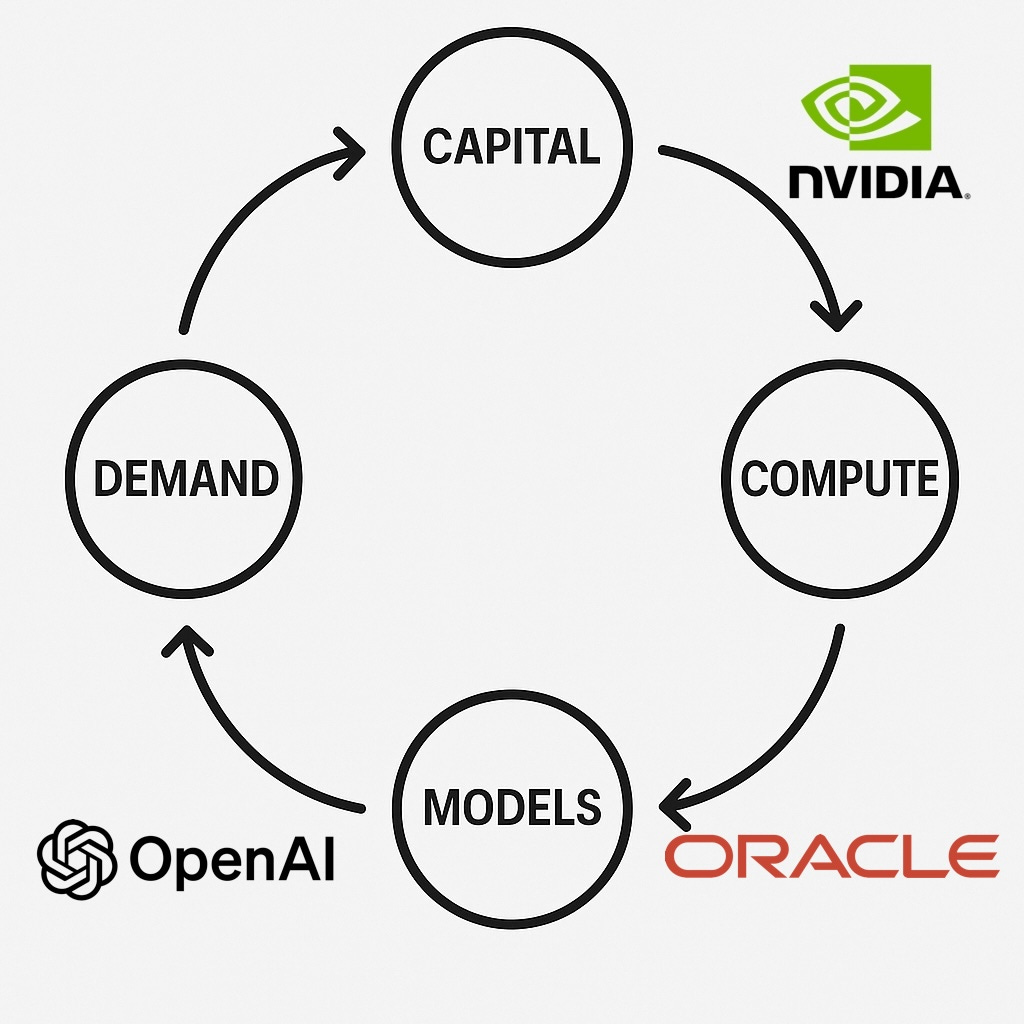

The capital loop

The money flows almost like a cartoon: OpenAI spends with Oracle → Oracle buys from Nvidia → Nvidia invests into OpenAI → repeat. On paper it looks circular, even bubble-like—capital bouncing between the same three names.

But the distinction is that each spin leaves behind physical assets: GPUs shipped, racks powered up, substations wired, models deployed to hundreds of millions of users. It’s not just numbers recycling across balance sheets—it’s dollars hardening into infrastructure.

The historical rhyme

This isn’t tulips or banner ads. The better analogies are railroads in the 1800s or the fiber buildout of the late 1990s. In both cases, overcapacity wiped out equity holders in the short term, but the infrastructure remained and became foundational.

Risks

Power access: interconnect queues and substations are the true bottleneck now, not chips.

HBM supply: memory and advanced-packaging capacity could cap how many GPUs actually ship, regardless of how many are ordered.

Utilization: 10 GW of idling silicon would crater returns; keeping GPUs busy is existential.

Policy: tariffs, permitting, or AI regulation could reshape the economics overnight.

Investor lens

Watch LCOC (levelized cost of compute): who can turn megawatts into tokens cheapest?

Vendor capital as moat: Nvidia financing its own demand gives it stickiness no startup chip vendor can replicate.

Second-order bets: optics, HBM, advanced packaging, switchgear, immersion cooling—all benefit from this cycle no matter who “wins” the model race.
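The article does not define LCOC, so the following is only a sketch by analogy with levelized cost of energy: annualized cost divided by annual token output. It assumes straight-line capex with no discounting and power drawn at full load regardless of utilization. Every input value is a hypothetical placeholder, not a reported figure.

```python
HOURS_PER_YEAR = 8760
SECONDS_PER_HOUR = 3600


def levelized_cost_per_million_tokens(
    capex_usd: float,
    lifetime_years: float,
    power_mw: float,
    power_price_usd_per_mwh: float,
    peak_tokens_per_second: float,
    utilization: float,
    other_opex_usd_per_year: float = 0.0,
) -> float:
    """Annualized cost divided by annual tokens served, in $ per million tokens."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    annual_capex = capex_usd / lifetime_years  # straight-line, no discount rate
    annual_energy = power_mw * HOURS_PER_YEAR * power_price_usd_per_mwh
    annual_cost = annual_capex + annual_energy + other_opex_usd_per_year
    annual_tokens = (
        peak_tokens_per_second * utilization * HOURS_PER_YEAR * SECONDS_PER_HOUR
    )
    return annual_cost / annual_tokens * 1e6


# Hypothetical 1 GW site; placeholder numbers chosen only to show the shape.
site = dict(
    capex_usd=50e9,
    lifetime_years=5,
    power_mw=1000,
    power_price_usd_per_mwh=60,
    peak_tokens_per_second=5e8,
)
for u in (0.9, 0.6, 0.3):
    cost = levelized_cost_per_million_tokens(utilization=u, **site)
    print(f"utilization {u:.0%}: ${cost:.2f} per million tokens")
```

Because costs are fixed in this simplified model, cost per token scales inversely with utilization: halve the utilization and the cost per token doubles. That is the utilization risk in numeric form.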

Bottom line

OpenAI’s 10 GW plan isn’t just capex—it’s a bid for independence from Microsoft, fueled by Nvidia’s need to weaken the hyperscaler triopoly. The capital loop may look like a meme, but it’s also a flywheel: capital → compute → models → demand → back to capital.

The question isn’t whether the cycle makes sense—it’s who will still be standing when the music slows.

How do you see this week’s OpenAI + AMD deal fitting into this flywheel?