The AI-first transformation of the Data Center: Insights from Nvidia's Q3 Fiscal 2024 Earnings

Large Language Models and AI workloads power an infrastructure supercycle

Nvidia pioneered the accelerated computing movement in 1999 with the advent of the GPU, though its real inflection came nearly 20 years later with the adoption of the AI-first data center. In the past couple of years, the data center has seen a massive shift towards AI-first workloads that run on Nvidia’s GPUs. See our previous writeup on the data center in the age of AI and its derivative implications. While the world has attentively watched the drama unfold at OpenAI, Nvidia has quietly reached $1.2T of market capitalization, with its AI chips cited 31X more frequently in research papers than those of any other chip provider. Nvidia has the most valuable and mission-critical ecosystem in AI:

NVIDIA GPUs, CPUs, networking, AI foundry services and NVIDIA AI Enterprise software are all growth engines in full throttle. Today, the company released its investor presentation and earnings report. Here are the highlights from Nvidia’s Q3 of fiscal 2024:

$18.12B of quarterly revenue, up 206% from a year ago and 34% from just Q2!

Record Data Center revenue of $14.51 billion, up 41% from Q2, up 279% from year ago

Nvidia’s Data Center business, once small relative to its historic gaming business, demonstrates the AI-first transformation of the data center

Nvidia’s Data Center business operates at 85% gross margins

Earnings per share of $3.71, up 12X in just a year and 50% from the prior quarter
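As a quick sanity check on these growth rates, the prior-period value each one implies can be backed out as current / (1 + growth). This is a minimal Python sketch using only the figures cited above; the helper name `implied_prior` is hypothetical, and because the reported percentages are rounded, the implied values are approximations rather than Nvidia's exact reported numbers.

```python
# Minimal sketch: back out the prior-period value implied by a reported
# percentage change. implied_prior is a hypothetical helper for illustration.
def implied_prior(current: float, growth_pct: float) -> float:
    """Return the prior value given the current value and its % growth."""
    return current / (1 + growth_pct / 100)


# Figures as cited in the highlights (revenue in $B, EPS in $).
highlights = {
    "Total revenue": (18.12, {"year ago": 206, "prior quarter": 34}),
    "Data Center revenue": (14.51, {"year ago": 279, "prior quarter": 41}),
    "EPS": (3.71, {"prior quarter": 50}),
}

for metric, (current, growth) in highlights.items():
    for period, pct in growth.items():
        prior = implied_prior(current, pct)
        # Reported growth rates are rounded, so these are approximations.
        print(f"{metric}, implied {period}: {prior:.2f}")
```

For example, 18.12 / 3.06 comes out to about 5.92, i.e. roughly $5.9B of revenue in the year-ago quarter.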

While these figures are truly staggering, Nvidia’s penetration of total global data center infrastructure is still quite low. Where that penetration ultimately plateaus depends largely on end-user adoption of AI-first workloads:

Among its product and partnership highlights, the company:

Introduced an AI foundry service — with NVIDIA AI Foundation Models, NVIDIA NeMo™ framework and NVIDIA DGX™ Cloud AI supercomputing — to accelerate the development and tuning of custom generative AI applications, first available on Microsoft Azure, with SAP and Amdocs among the first customers.

Announced NVIDIA HGX™ H200 with the new NVIDIA H200 Tensor Core GPU, the first GPU with HBM3e memory, with systems expected to be available in the second quarter of next year.

Announced that the NVIDIA Spectrum-X™ Ethernet networking platform for AI will be integrated into servers from Dell Technologies, Hewlett Packard Enterprise and Lenovo in the first quarter of next year.

Made advances with global cloud service providers:

Google Cloud Platform made generally available new A3 instances powered by NVIDIA H100 Tensor Core GPUs and NVIDIA AI Enterprise software in Google Cloud Marketplace.

Microsoft Azure will be offering customers access to NVIDIA Omniverse™ Cloud Services for accelerating automotive digitalization, as well as new instances featuring H100 NVL Tensor Core GPUs and H100 with confidential computing, with H200 GPUs coming next year.

Oracle Cloud Infrastructure made NVIDIA DGX Cloud and NVIDIA AI Enterprise software available in Oracle Cloud Marketplace.

Partnered with a range of leading companies on AI initiatives, including Amdocs, Dropbox, Foxconn, Genentech (member of Roche Group), Infosys, Lenovo, Reliance Industries, Scaleway and Tata Group.

Announced record-setting performance in the latest two sets of MLPerf benchmarks for inference and training, with the NVIDIA Eos AI supercomputer training a GPT-3 model 3x faster than the previous record.

Announced growing worldwide support for the NVIDIA® CUDA® Quantum platform, including new efforts in Israel, the Netherlands, the U.K. and the U.S.

This combination of growth and profitability at this scale is unprecedented, and it is evidence of the unique moment we are in within the AI-first infrastructure supercycle. Even its gaming unit is humming, at $2.86B of quarterly revenue, up 81% year over year. Nvidia’s main sources of demand are LLM startups, technology companies, global and regional cloud service providers, and enterprises investing in infrastructure ahead of anticipated AI application demand.
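To put the segment figures in proportion, the short sketch below computes each segment's share of the $18.12B quarter using only the numbers cited in this article. Lumping everything else into a single residual bucket is a simplification for illustration.

```python
# Q3 FY2024 figures cited in this article, in $B.
total_revenue = 18.12
segments = {"Data Center": 14.51, "Gaming": 2.86}

for name, revenue in segments.items():
    # Share of total quarterly revenue, formatted as a percentage.
    print(f"{name}: {revenue / total_revenue:.1%} of revenue")

# Residual for segments not broken out in this article
# (e.g. professional visualization and automotive).
other = total_revenue - sum(segments.values())
print(f"All other segments: ${other:.2f}B")
```

Data Center works out to roughly 80% of the quarter, versus about 16% for Gaming, with about $0.75B left for everything else.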

The AI-first transformation of the Data Center

Accelerated computing requires full-stack and data center-scale innovation across silicon, systems, algorithms and applications. Significant expertise and effort are required, but application speed-ups can be incredible, resulting in dramatic savings in cost and time to solution. For example, two NVIDIA HGX nodes with 16 NVIDIA H100 GPUs in total, costing $400K, can replace 960 CPU server nodes costing $10M for the same LLM workload. NVIDIA chips carry the value of the full stack, not just the silicon.
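The arithmetic behind that comparison is simple enough to sketch. The snippet below uses only the figures quoted above (Nvidia's own example) and assumes the $400K and $10M are total system costs for each configuration; the per-unit figures are derived for illustration, not quoted prices.

```python
# GPU-accelerated vs. CPU-only cluster for the same LLM workload,
# using the figures quoted in the example above.
gpu_nodes, gpu_count, gpu_cost = 2, 16, 400_000
cpu_nodes, cpu_cost = 960, 10_000_000

cost_ratio = cpu_cost / gpu_cost        # how many times cheaper the GPU setup is
node_ratio = cpu_nodes / gpu_nodes      # CPU nodes replaced per HGX node
cost_per_gpu = gpu_cost / gpu_count     # system cost per H100 (assumes cost is for whole system)
cost_per_cpu_node = cpu_cost / cpu_nodes

print(f"{cost_ratio:.0f}x lower cost")             # 25x lower cost
print(f"{node_ratio:.0f} CPU nodes per HGX node")  # 480 CPU nodes per HGX node
print(f"${cost_per_gpu:,.0f} per GPU, system-level")
print(f"${cost_per_cpu_node:,.0f} per CPU node")
```

Even at roughly $25,000 of system cost per GPU, the accelerated setup comes in at about 1/25th of the CPU cluster's cost.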

To illustrate how massive this data center transformation is, consider that Microsoft is spending over $50B annually on data centers, more than any other infrastructure project in history, including government programs!

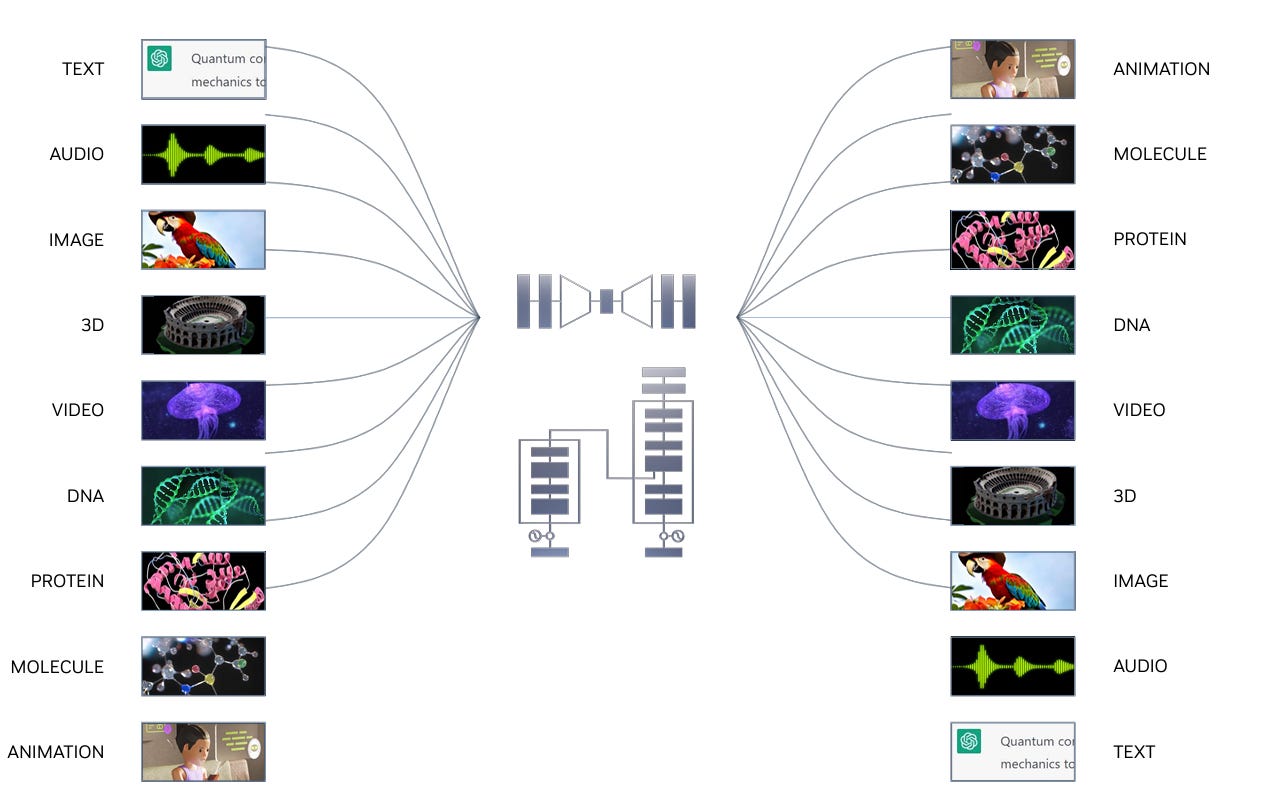

Accelerated computing is needed to move past Moore’s Law and tackle the most impactful opportunities of our time, like AI, climate simulation, drug discovery, ray tracing, and robotics. NVIDIA is uniquely dedicated to accelerated computing, working both top-to-bottom (refactoring applications and creating new algorithms) and bottom-to-top (inventing new specialized processors, like RT Core and Tensor Core).

For nearly every AI use case, Nvidia’s accelerated computing platform is the backbone of the compute workloads that power AI applications:

The technical diagram below illustrates these use cases, showing how Nvidia works with data vendors and powers retrieval-augmented generation (RAG) for AI-first applications:

Looking ahead, Nvidia guided to $20B of revenue for next quarter, roughly 10% quarter-over-quarter growth from this quarter, which would put the company at an annualized revenue run-rate of about $80B at roughly 75% overall gross margins. Nvidia is the clear #1 in AI, and its position as the 800-pound gorilla in infrastructure can’t be overstated.
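A quick check of the guidance math, as a sketch using this quarter's $18.12B and the $20B guidance. The run-rate uses the simple convention of multiplying one quarter by four, which is an assumption about how "annualized" is meant here.

```python
current_quarter = 18.12   # Q3 FY2024 revenue, $B
guidance = 20.0           # next-quarter revenue guidance, $B

qoq_growth = guidance / current_quarter - 1
annualized_run_rate = guidance * 4   # simple run-rate: one quarter times four

print(f"Implied QoQ growth: {qoq_growth:.1%}")
print(f"Annualized run-rate: ${annualized_run_rate:.0f}B")
```

The implied growth comes out to about 10.4%, and four quarters at the guided level sum to $80B.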