Hyperscaler AI Custom Chips (ASIC), CPUs and Networking Initiatives

Breaking down the supply chain of hyperscaler AI custom chip development

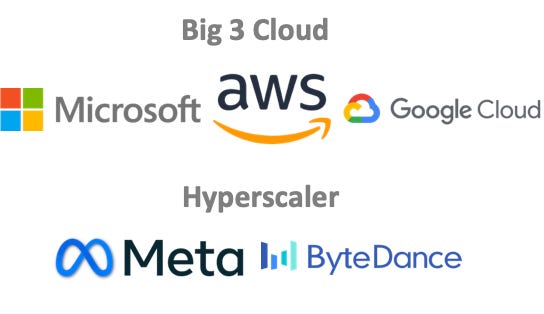

As hyperscalers seek to reduce their reliance on NVIDIA and optimize for specific workloads, big cloud providers are increasingly developing their own AI Application-Specific Integrated Circuits (ASICs). The AI ASIC ecosystem is the equal and opposite reaction to the rise of NVIDIA, with Big 3 Clouds aiming to reduce reliability on third-party NVIDIA and differentiate with their own offerings. This move, also evident in companies like Meta and Bytedance, aims to diminish dependencies on third-party suppliers in hardware infrastructure.

Through AI ASICs, these companies aim for technological independence and enhanced performance, crucial for their scaling needs and future innovations.

Pros of AI ASICs:

Optimized Performance: Tailored specifically for set tasks, offering improved efficiency (i.e. LLM inference; Newsfeed)

Cost Reduction: Lower operational costs by avoiding third-party hardware.

Energy Efficiency: Custom ASICs consume less power, important in large data centers.

Enhanced Security: Direct control over hardware enhances data security and privacy.

Cons of AI ASICs:

High Initial Costs: Significant investment in design and manufacturing.

Inflexibility: Hard to reprogram or adapt to new technologies.

Long Development Time: Delays from design to deployment can be substantial.

Obsolescence Risk: Rapid technological advances can quickly make custom ASICs outdated.

Generated with DALLE: depicting NVIDIA as the giant Goliath and the smaller yet determined figures of Google, Microsoft, AWS, and Broadcom as modern-day Davids. This scene illustrates the fierce competition in the AI hardware market.

Google TPU (AI ASIC)

Google TPUs: Google's Tensor Processing Units (TPUs) are the cornerstone pillar to Google’s AI strategy, with the company investing heavily—around $8 billion per year with Broadcom alone (!!!)—to develop these specialized processors. These TPUs are pivotal for running and training complex machine learning models internally, including Google's Gemini model, and are integral to the services offered through Google Cloud Platform (GCP).

Google's TPUs are the key pillar to both their internal AI/ML operations and as well as GCP's enterprise offerings, enhancing their ability to run AI workloads efficiently and cost-effectively. This technological evolution, from initial designs focused on integer operations to the latest TPU v4 models supporting floating-point calculations and achieving exascale performance, has solidified Google's lead in AI ASIC development by offering superior price-performance across successive TPU generations.

Suppliers: Google's TPU AI ASIC processor family has been supplied and designed alongside Broadcom across multiple generations, at 7nm, 5nm, and 3nm. In addition to powering Google's TPUs, Broadcom also equips Google's networking initiatives with the Marvell PAM DSP and Astera PCIe retimer, key components that enhance high-speed data transfer and network reliability. These elements underscore Broadcom's significant role in providing the sophisticated infrastructure that supports both the computing and networking backbone of Google's technological ecosystem.

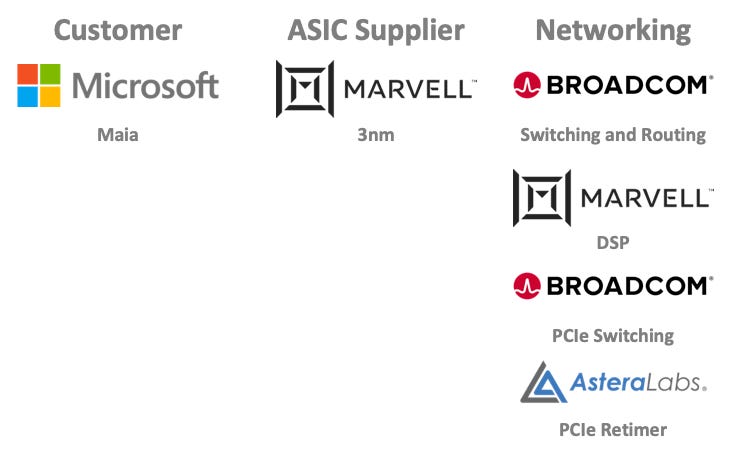

Microsoft Maia (AI ASIC)

Microsoft’s AI ASIC: Microsoft's Maia AI Accelerator is a cornerstone of Azure's AI infrastructure, tailored to enhance cloud-based AI services. Designed alongside Marvell and developed on a 3nm process by TSMC, Maia 100 is designed to efficiently handle demanding AI tasks, reflecting Microsoft's shift towards integrating custom silicon to boost performance and reduce reliance on external hardware sources (Azure) (Source). This AI accelerator is equipped with advanced cooling solutions and custom power distribution systems to meet the intensive power and thermal management needs of AI computations, aligning with Microsoft's sustainability goals by improving data center energy efficiency (Tom's Hardware).

Suppliers: Microsoft collaborates with multiple suppliers to develop its AI and networking capabilities. The Maia AI ASIC processor family is built using Marvell's 3nm technology, reflecting Microsoft's focus on powerful AI processing. For networking, Microsoft employs Marvell's PAM DSP, Broadcom's PCIe switching, and Astera's PCIe retimer to enhance network performance and reliability. Additionally, Microsoft works with Arista to further improve networking efficiency and scalability, ensuring robust infrastructure support for its cloud services. These partnerships underline Microsoft's approach to leveraging specialist technologies to strengthen its service offerings.

Amazon Trainium and Inferentia (AI ASIC)

AWS Inferentia and Trainium (AI ASIC): Amazon began its journey into custom AI ASIC development with the creation of the Trainium and Inferentia series, which are now starting to get to production AI workloads. Trainium chips are particularly adept at training deep learning models efficiently and cost-effectively, making them suitable for handling extensive datasets. Despite these advancements, Amazon continues to face challenges transitioning AI workloads from NVIDIA's CUDA platform, necessitating significant software development and ecosystem support to ensure seamless integration on its custom chips (GeekWire) (TrendForce). AWS appears behind Google TPUs and Microsoft in its custom AI ASICs.

Big 3 Cloud CPUs:

The Big 3 cloud providers—AWS, Azure, and Google Cloud (GCP)—collectively utilize an estimated 50.4 million CPUs in their infrastructure, derived from their market share and the typical server configurations. Specifically, AWS has approximately 25.6 million CPUs, Azure has 16 million, and GCP has 8 million CPUs. These CPUs are predominantly based on ARM architecture, chosen over traditional Intel or AMD processors primarily due to cost efficiency and power consumption benefits.

Estimates suggest that using ARM-based processors can offer significant cost advantages over traditional Intel CPUs, often cited >40% better price performance. A typical Intel CPU costs about $1,000 versus an ARM CPU at $600. In total, it’s estimated working with ARM saved the Big 3 Clouds over $20B. This cost efficiency, combined with lower power requirements, presents a compelling case for these cloud giants to continue investing in ARM technology for their future developments (Amazon Web Services) (Wikipedia).

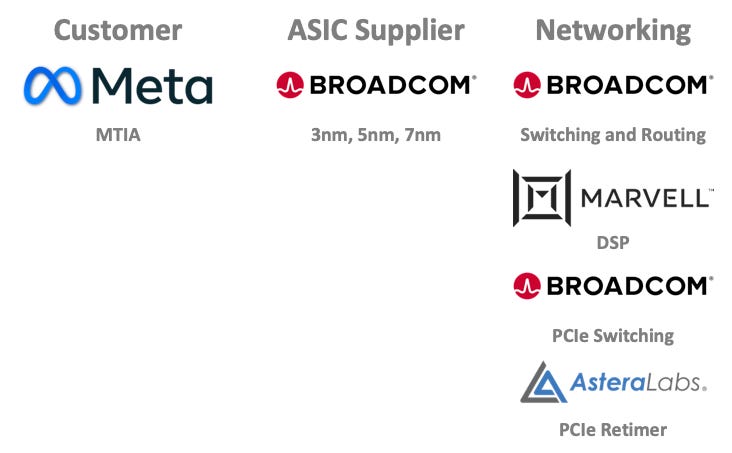

Meta MTIA (AI ASIC):

Meta’s MTIA is enhancing its processing capabilities through the development of custom AI ASIC processors, utilizing Broadcom's advanced semiconductor technologies across the 7nm, 5nm, and 3nm nodes. The addition of Broadcom's CEO, Hock Tan, to Meta's board signifies a strong partnership aimed at advancing Meta's technological infrastructure. Additionally, Meta leverages technology from Marvell for their networking needs, specifically utilizing Marvell's PAM DSP, Broadcom's PCIe switching, and Astera's PCIe retimer technologies to ensure robust and efficient data handling capabilities across its network. This strategic use of multiple suppliers underscores Meta's commitment to integrating leading-edge technology to support its vast operational demands.

Meta runs more inference operations globally than any other company, primarily across applications such as content recommendation (i.e. Instagram / FB), advertising targeting, and user behavior prediction. Some estimates say Meta runs over 2T inference workloads per day. It’s no wonder Zuck recently announced over $20B of investment in GPUs and AI Data Center infra.

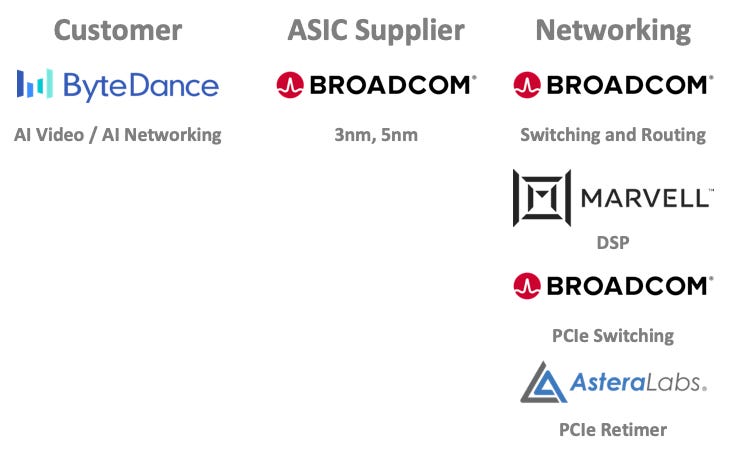

Bytedance AI Video / AI Networking (AI ASIC):

Bytedance is developing custom ASICs for AI video and networking using Broadcom's 5nm and 3nm technologies to enhance its platforms like TikTok. This initiative allows Bytedance to better handle intensive video processing tasks, reduce dependency on external suppliers, and improve control over its hardware ecosystem. By optimizing processing speed and power consumption, Bytedance aims to boost user experience and operational efficiency on its data-heavy platforms.

Conclusion

In conclusion, the development of custom AI ASICs by hyperscalers such as AWS, Azure, Google Cloud, Meta, and Bytedance represents a strategic move to counterbalance NVIDIA's dominance in the AI hardware market. Google's TPU initiative stands out as the most sizable project, with significant investments and advancements aimed at optimizing AI workloads. These custom ASIC projects are not just about enhancing performance and efficiency; they are pivotal in potentially bending the AI economics cost curve. By reducing dependency on traditional suppliers like NVIDIA and AMD in GPUs, hyperscalers are hoping to control their own destiny in AI usage in their applications.

Generated with DALL-E: NVIDIA as a monopolistic entity, soaking away money from Google, Microsoft, Amazon, Meta, and Bytedance. This illustration highlights the economic imbalance and NVIDIA's dominant position in the AI hardware market.

There is precedent for success with custom chip development in CPUs, with the Big 3 Clouds saving over $20B on over 50M CPUs developed alongside ARM. The ARM architecture massively reduced their reliance on Intel and AMD. We will see if the Big 3 Clouds and hyperscalers have success in the much more complicated world of AI ASICs and GPUs.

Hey Chris! I myself track the semiconductor space from GPU’s to XPU’s. I’m also intrigued by the CapEx the Hyperscalers are doling out and when if ever the still stop. I subscribed to your page, would be great if you could do the same for me! Thx.