Open-source vs. Proprietary Models

Will models trend toward commoditization, or will closed-model vendors like OpenAI and Anthropic capture all the margin with premium services?

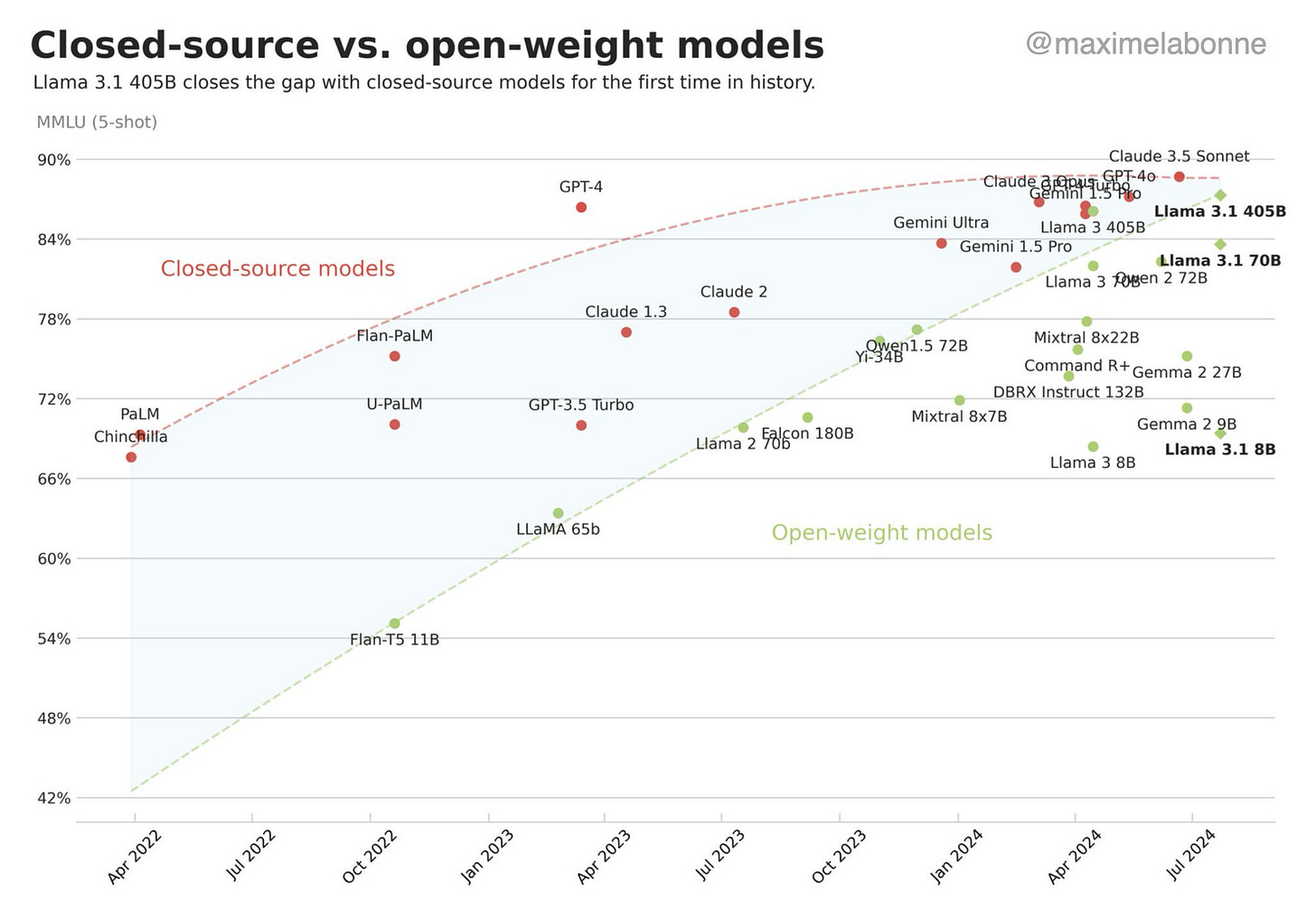

Just a year ago, proprietary AI models like GPT-4 and Claude 2 held a massive advantage over open-source alternatives. Open-source models, including the initial version of Meta's LLaMA, were hardly usable. Unlike OpenAI, Anthropic, Cohere and other proprietary model vendors, no open-source player had billions in funding for AI data centers. Times are changing! Last week, Llama 3.1 narrowed the performance gap with proprietary model vendors OpenAI and Anthropic.

Source: Benchmarks from Maxime Labonne

2022 and 2023: The race for compute

The dominance of closed-source models such as GPT-4 and Claude 2 went unchallenged in 2022 and 2023, with hardly any of the top practitioners in AI using open-source models. Training these *large* models was extremely capital-intensive, with early movers like OpenAI raising $11 billion and Anthropic raising $7.3 billion. Open-source models were underfunded and lacked the computing capacity needed to build accurate models. NVIDIA A100 and H100 chips were sold out many quarters in advance, with AI developers scrambling to CoreWeave, Crusoe, Lambda and others to procure GPU supply. There were the haves and have-nots of GPUs, or as we put it, “the GPU Rich and the GPU Poor”.

2024: The rise of Meta, Mistral and open-source with meaningful compute

In 2024, open-source AI became GPU rich. Meta has invested $20 billion in AI-focused data centers to gain independence from third-party AI vendors, nearly matching OpenAI’s funding from Microsoft and others. Mistral, with nearly $1 billion in funding, has become one of the top model developers, giving Europe a horse in the race and bolstering open-source. Additionally, companies like Hugging Face and Databricks have amplified the open-source AI community by supporting and building on these models.

As enterprises move from tinkering to deploying models in production, they face three main concerns:

Latency: Critical for time-sensitive AI applications.

Cost: Significant for compute-intensive AI applications.

Security: Important for data residency and preventing third-party models from ingesting private data.

Open-source models offer advantages in these areas, providing greater control, cost efficiency, and enhanced security. Model developer platforms like Fireworks.ai, Gradient.ai, Baseten, Together.ai, and Modal are making open-source AI more accessible and usable for enterprises, and therefore more practical and widespread. In fact, four of the 20 early- and mid-stage Enterprise Tech 30 companies in 2024 were model developer platforms.

Today: Closed-source vs. open-source models, a comparative analysis

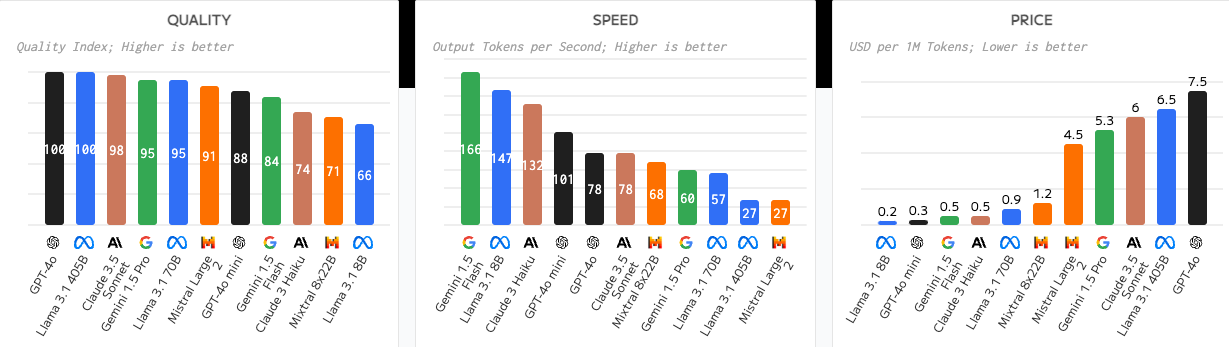

The performance gap between open- and closed-source models has narrowed, while other advantages of open-source models are becoming more apparent, notably security, latency and cost. However, open-source models still face challenges in scalability and enterprise-grade reliability, which has given rise to the model platforms mentioned above. Recent benchmark results indicate that open-weight models are rapidly catching up with their closed-source counterparts: the flagship Llama 3.1 model is competitive with leading foundation models, including GPT-4, GPT-4o, and Claude 3.5, across most tasks.

Source: Introducing Llama 3.1, Meta AI blog

Across the ecosystem, model inference prices are dropping sharply. OpenAI is cutting prices aggressively: GPT-4o is 90% cheaper than GPT-4 was a year ago. These reductions came alongside improvements in context length, latency, and knowledge cut-off dates, so they are not pure price cuts. Despite the lower prices, OpenAI’s API revenue ($1.25 billion through OpenAI and $1 billion via Azure-OAI) has grown more than 10X in the past year.
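To see what these two figures imply together, here is a rough back-of-the-envelope sketch. It assumes, as a deliberate simplification, that API revenue is simply price times usage and that the 90% price cut applies across all API usage; the function name and that assumption are ours, not OpenAI's reporting.

```python
def implied_usage_growth(revenue_growth: float, price_cut: float) -> float:
    """Usage growth implied by revenue growth and a fractional price cut.

    Assumes revenue = price * usage, with one blended price (a simplification).
    """
    if not 0 <= price_cut < 1:
        raise ValueError("price_cut must be in [0, 1)")
    price_factor = 1.0 - price_cut  # a 90% cut leaves 10% of the old price
    return revenue_growth / price_factor


# Figures from the text: revenue up more than 10X, prices down 90%.
usage_growth = implied_usage_growth(revenue_growth=10.0, price_cut=0.90)
print(f"Implied usage growth: about {usage_growth:.0f}X")
```

Under those assumptions, usage would have had to grow on the order of 100X for revenue to grow 10X. Real pricing is a blend across models, so treat this as an order-of-magnitude illustration only.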

Per public benchmarks from Artificial Analysis, GPT-4o and Llama 3.1 405B are tied on quality and roughly equal on speed, but Llama 3.1 405B is more than 7X cheaper. Further, Meta’s small 8B model is about 50% faster than OpenAI’s fastest model, GPT-4o Mini, and 50% cheaper than OpenAI’s cheapest model.

It’s worth noting that the major open-source vendors competing with heavyweights OpenAI and Anthropic aren’t exactly grassroots open-source projects. Rather, both Meta’s and Mistral’s model efforts are multibillion-dollar initiatives backed by companies that just so happen to have open model architectures. Capital and GPUs still reign supreme in the development of cutting-edge models.

Implications for the Future

Whether open-source or proprietary models win is the ultimate question for the future of software and AI. Are we reaching diminishing marginal returns with each incremental model? Or will capital intensity and talent prevail, leading to a monopoly or duopoly of proprietary vendors?

This year, OpenAI’s training and inference costs are projected to reach $7 billion, while Anthropic will spend $2.5 billion. Notably, OpenAI’s computing expenses are more than 5X its employee costs, while for Anthropic, they are nearly 10X. Looking ahead, training costs are expected to stabilize, and inference costs are predicted to drop significantly, potentially leading to better margins. Currently, computing costs exceed total revenue for both leading model developers, which is unsustainable.
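The ratios above can be turned into rough employee-cost figures. The sketch below uses only the numbers stated in the text and treats "more than 5X" and "nearly 10X" as exactly 5 and 10, which is our simplifying assumption, so the outputs are approximations rather than reported figures.

```python
# Projected compute (training + inference) costs in $ billions, from the text.
COMPUTE_COST_BN = {"OpenAI": 7.0, "Anthropic": 2.5}

# Compute-to-employee-cost ratios, rounded from "more than 5X" and "nearly 10X".
COMPUTE_TO_EMPLOYEE_RATIO = {"OpenAI": 5.0, "Anthropic": 10.0}


def implied_employee_cost(compute_bn: float, ratio: float) -> float:
    """Employee cost in $ billions implied by compute cost and the stated ratio."""
    return compute_bn / ratio


implied = {
    name: implied_employee_cost(COMPUTE_COST_BN[name], COMPUTE_TO_EMPLOYEE_RATIO[name])
    for name in COMPUTE_COST_BN
}

for name, cost in implied.items():
    print(f"{name}: roughly ${cost:.2f}B in employee costs")
```

Because OpenAI's ratio is "more than 5X", its implied figure is an upper bound; because Anthropic's is "nearly 10X", its implied figure is a slight underestimate.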

Despite the massive cash burn required to compete in AI, open-source models have caught up, with Meta and Mistral leading the way. Both have substantial funds to stay competitive, with Meta allocating $20 billion and Mistral raising nearly $1 billion. It’s clear that open-source AI is no longer constrained by GPU availability, and OpenAI and Anthropic will need to excel in areas beyond sheer compute volume.