The AI Infrastructure Era: Profit in Act I, Build Moats for Act II

A framework for separating today’s commercialization paths from tomorrow’s platform winners

Written with my colleagues Sunil Potti and Jake Flomenberg.

Enterprise infrastructure is entering a bifurcated cycle: an immediate scramble to operationalize AI under extreme resource constraints (Act 1), followed by a structural rewrite of organizational architecture itself (Act 2). The firms that win the next decade will bridge these worlds, capturing momentum now while accumulating long-duration advantage as AI becomes the primary consumer and producer of work.

The evolution of enterprise infrastructure can be viewed as a two-act play.

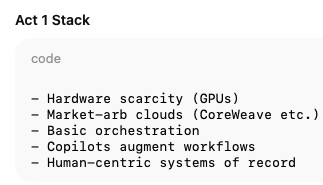

Below are the key needs and constraints defining each act.

Act 1:

Supply-side constraints (GPU scarcity, infra fragmentation).

Tools that accelerate building (acceleration, security, orchestration).

Many will be temporary arbitrage plays; some will become rails.

Act 2:

A structural rewrite of “how work happens.”

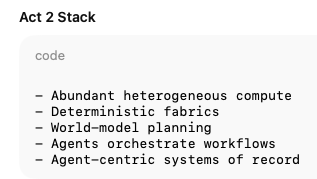

Systems treat agents as organizational primitives.

Entire SoRs (AD, HRIS, ERP, IdPs, ticketing) get replaced.

Act 1 is the AI-native momentum phase: a period of acute infrastructure bottlenecks, new AI fabrics, and a proliferation of tools not just for building and securing AI-native applications and processes but for accelerating and unblocking them. Many of these bets may not endure as meaningful companies but could be good investment outcomes nonetheless.

Act 2 is the structural transformation phase. The firms that dominate it will emerge not from “micro” rewrites using AI, or from tooling that the foundational layers can absorb over time, but from foundational redefinitions of “how work happens” combined with “lessons learned” from Act 1 that help create more enduring systems. Imagine an enterprise org hierarchy defined not just by employees but also by agents as first-class organizational elements: entire systems of record such as HR, and core infrastructure services such as Active Directory, will need to be reimagined.

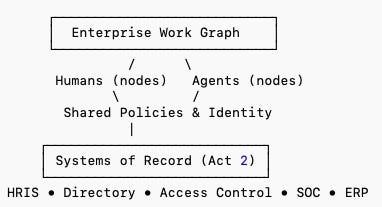

So the strategic opportunity for us lies in making targeted investments across both acts, balancing near-term momentum with long-term structural vision. This involves a three-pronged approach:

Act 1 Investments: These investments will focus on the “tracks” of the current AI build out. Opportunities in this category are driven by immediate, intense demand and clear market dislocations, such as the ongoing GPU shortage. The key discipline here will be to differentiate between durable infrastructure plays and temporary arbitrage opportunities that will fade as the market matures.

Act 2 Investments: These investments will target “category creation” opportunities that fundamentally rewrite existing software and security paradigms. These are higher-risk, longer-horizon ventures that require a deep conviction in a company’s vision to see beyond the limitations of the current stack. (eg ..?)

The Bridge (Act 1+2): We also believe there will be valuable investments in companies whose Act 1 products are necessary to solve today’s pressing problems but are architected to become the system of record for the Act 2 paradigm. These are the platforms on which we will “double down,” as they offer the potential for both near-term revenue and long-term, defensible market leadership (e.g., Theom, which can not only replace current data classification and data lake protection solutions but also serve as the foundational data governance platform that enterprises rely on to unlock agentic deployments).

II. Looking ahead to Act 2 structural transformation and working backwards into Act 1 and Bridge Opportunities

Several themes are proposed in the visual for discussion; a few are detailed below:

Democratization of AI Clouds

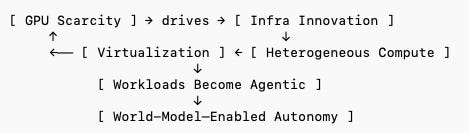

Today’s compute economy is defined by scarcity — the “GPU-constrained” world.

Neo-clouds (CoreWeave, Nebius, Lambda Labs, Crusoe, etc.) and cloud fabrics that stitch across hyperscalers have emerged to address this shortage. These players capture short-term, ?-margin value in solving supply-side inefficiency while setting the stage for longer-term abstraction layers. We first wrote about this phenomenon in 2023 with “The Financialization of the Data Center” piece. Some will make it to Act 2, others won’t.

The next step will come from the eventual democratization of GPU supply (e.g., multiple GPU makers and viable non-GPU options such as TPUs becoming mainstream), but also from virtualization and workload orchestration that let developers treat heterogeneous compute as fungible while also serving local markets with latency-sensitive workloads. Sovereignty concerns will also feed the possible democratization of “country-specific” GPU clouds.

We also believe future acts will be defined by heterogeneous compute spanning other types of GPUs, TPUs, ASICs, and CPUs. This shift will have massive derivative effects across enterprise infrastructure.

LLMs ⇒ World Models

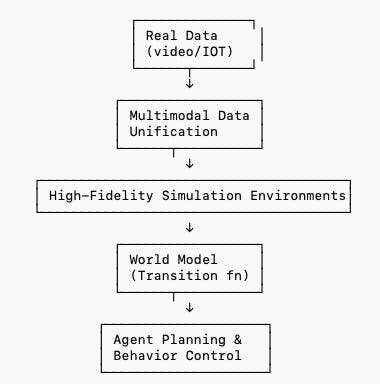

This is currently a heavily debated topic in the AI community. Current LLMs possess a remarkable ability to process and generate text, but they lack a true, underlying understanding of cause and effect. The next frontier in AI research is the development of “World Models.” A world model is an internal simulation of an environment that allows an agent to predict the consequences of its actions before taking them. It builds upon the mathematical underpinnings of Markov Decision Processes (MDPs), which model sequential decision-making in situations with uncertain outcomes. Formally, a world model learns the transition function of the environment: the probability of ending in a new state s’ given the current state s and an action a, expressed as P(s’|s,a).
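To make the transition function concrete, here is a minimal sketch of the simplest possible world model: a table that estimates P(s’|s,a) by counting observed transitions. The class name, the ticketing states, and the "escalate" action are all hypothetical and chosen only for illustration; real world models learn this function with neural networks over far richer state spaces.

```python
from collections import Counter, defaultdict


class TabularWorldModel:
    """Estimates P(s' | s, a) from observed transitions by counting."""

    def __init__(self):
        # (state, action) -> Counter of observed next states
        self.counts = defaultdict(Counter)

    def observe(self, state, action, next_state):
        self.counts[(state, action)][next_state] += 1

    def transition_prob(self, state, action, next_state):
        seen = self.counts.get((state, action))
        if not seen:
            return 0.0  # no data for this (state, action) pair yet
        return seen[next_state] / sum(seen.values())

    def predict(self, state, action):
        """Most frequently observed next state, or None if the pair is unseen."""
        seen = self.counts.get((state, action))
        if not seen:
            return None
        return seen.most_common(1)[0][0]


# Hypothetical data: escalating an open ticket resolved it 3 times out of 4.
model = TabularWorldModel()
for outcome in ["resolved", "resolved", "resolved", "open"]:
    model.observe("open", "escalate", outcome)

p_resolved = model.transition_prob("open", "escalate", "resolved")
```

An agent holding such a model can ask "what is likely to happen if I take this action?" before acting, which is the behavior the formal definition describes.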

Recent research has shown that today’s LLMs can be “induced” to perform basic world model functions, such as determining the preconditions for an action and predicting the resulting state change. This is a foundational step toward creating agents that can truly reason, plan, and operate in complex, dynamic environments.
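The two functions mentioned here, checking an action’s preconditions and predicting the resulting state change, can be sketched in a few lines. This is an illustrative, hand-written symbolic version, not the method of any particular paper; in the research setting an LLM would supply the preconditions and effects. The `Action` type and the access-request facts are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    preconditions: frozenset  # facts that must hold before acting
    add_effects: frozenset    # facts that become true afterwards
    del_effects: frozenset    # facts that stop being true afterwards


def is_applicable(state: frozenset, action: Action) -> bool:
    """An action is valid only if every precondition holds in the state."""
    return action.preconditions <= state


def apply_action(state: frozenset, action: Action) -> frozenset:
    """Predict the next state: remove deleted facts, then add new ones."""
    if not is_applicable(state, action):
        raise ValueError(f"preconditions not met for {action.name}")
    return (state - action.del_effects) | action.add_effects


# Hypothetical enterprise example: granting access requires an approved request.
grant_access = Action(
    name="grant_access",
    preconditions=frozenset({"request_approved"}),
    add_effects=frozenset({"access_granted"}),
    del_effects=frozenset({"request_pending"}),
)

state = frozenset({"request_pending", "request_approved"})
next_state = apply_action(state, grant_access)
```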

Some example areas of interest include:

Advanced Simulation Environments: Platforms capable of generating vast quantities of high-fidelity synthetic data, which will be essential for training models to understand complex cause-and-effect relationships in a controlled manner. Simulation-first companies include Waabi and Applied Intuition; synthetic data platform companies include Synthesis AI, Datagen, and Sky Engine AI.

Data Platforms for Multimodal Reasoning: The real world is not just text. World models will need to ingest and correlate information from a wide array of sources, including video streams, sensor data from IoT devices, and structured logs from enterprise systems. Investing in the data platforms that can unify and process this multimodal data is a tangible way to invest in the future of world models. Categories here include physical video and sensor platforms and robotics/IoT data platforms, with companies like Viam and Formant. Next-gen storage platforms purpose-built for large-scale simulations and multimodal training can also be envisioned here.

The real enterprise unlock is not synthetic environments — it’s stable, predictable agent behavior. World models are the missing reliability layer.

Agents ⇒ Things

Act 2 extends the agentic wave into the physical domain: robots, sensors, and “things” with agency. This transformation moves AI from desktops and servers into real-world decision loops in factories, logistics, and healthcare. Emerging players like Sanctuary AI, Figure, and Covariant represent the early prototypes of this convergence. These “things” may require a new hardware stack to enable them, and their “thinking” will likely be split between the device and the cloud.

Edge inference and local autonomy are critical for sovereignty and latency.

A hybrid stack: on-device reflexes + cloud-level planning

Act 2 physical systems will adopt a layered control stack: reflexive inference at the edge, and world-model-driven planning in the cloud.
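As a rough sketch of what such a layered control stack could look like, the loop below lets a fast on-device rule override a slower plan that is assumed to have been computed in the cloud. The function names, the 0.5 m stop threshold, and the command strings are hypothetical placeholders, not any vendor’s API.

```python
from typing import List, Optional


def edge_reflex(distance_to_obstacle_m: float,
                stop_distance_m: float = 0.5) -> Optional[str]:
    """Fast on-device rule: return an override command, or None if all clear."""
    if distance_to_obstacle_m < stop_distance_m:
        return "stop"
    return None


def control_step(distance_to_obstacle_m: float,
                 cloud_plan: List[str],
                 step_index: int) -> str:
    """Pick the next command: reflex first, then the cloud plan, then a safe default."""
    override = edge_reflex(distance_to_obstacle_m)
    if override is not None:
        return override                  # the local reflex always wins
    if step_index < len(cloud_plan):
        return cloud_plan[step_index]    # follow the plan computed in the cloud
    return "hold"                        # plan exhausted or unavailable


plan = ["forward", "turn_left", "forward"]  # assumed output of a cloud planner
blocked = control_step(0.2, plan, 0)
clear = control_step(2.0, plan, 1)
offline = control_step(2.0, [], 0)
```

The design choice worth noting is the ordering: the device never waits on the network to react, and a missing plan degrades to a safe default rather than to undefined behavior.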

Micro ⇒ Chunky SaaS

Workflows themselves will be rewritten from first principles. Instead of AI co-pilots within human-centric systems, Act 2 SaaS will be AI-first orchestration platforms that treat humans as optional participants in the loop. This could produce AI-native ERP, finance, and HR systems — startups like Doss and Quanta hint at this future.

Chunky SaaS collapses dozens of micro workflows into autonomous processes that produce outputs, not dashboards. If Act 1 gave us copilots, Act 2 gives us “self-driving back-office systems.”

III. The Bridge - areas where Act 1 monetization builds into Act 2 defensibility:

We discuss the Agentic Cyber and Agentic Lifecycle themes in depth in other pieces.

A brief overview of the AI Networks theme is included below (a detailed introduction to this topic is here).

Why: Almost all clouds run at <50% GPU utilization, and the primary bottleneck for scaling AI is the network. Traditional networking was built for statistical multiplexing (many small, independent jobs), but large-scale AI training operates as a single, massive, synchronized job across tens of thousands of accelerators, demanding all-to-all communication where the worst-case latency dictates job completion time.
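A small worked example shows why the worst case, not the average, sets the pace. The numbers are hypothetical, and the sketch assumes no overlap between compute and communication: each training step takes the compute time plus the slowest worker’s communication time.

```python
def step_time_ms(compute_ms, comm_ms_per_worker):
    """A synchronized step ends only when the slowest worker finishes communicating."""
    return compute_ms + max(comm_ms_per_worker)


def utilization(compute_ms, comm_ms_per_worker):
    """Fraction of each step the accelerators spend computing."""
    return compute_ms / step_time_ms(compute_ms, comm_ms_per_worker)


# Hypothetical job: 1,000 workers, 100 ms of compute per step.
# 999 workers finish communication in 10 ms; one straggler takes 100 ms.
comm = [10.0] * 999 + [100.0]

mean_comm_ms = sum(comm) / len(comm)   # about 10 ms on average
actual_step_ms = step_time_ms(100.0, comm)
actual_util = utilization(100.0, comm)
```

Here the average communication time is roughly 10 ms, yet a single slow path doubles the step to 200 ms and halves utilization to 50%, which is the dynamic that makes tail latency so expensive at scale.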

What: The solution is to evolve from a reactive, “best-effort” network to a proactive, deterministic, and “perfectly scheduled” fabric. The goal is to make the network as predictable as an on-chip interconnect, removing random delays and jitter that stall expensive accelerators.

How: This is achieved through a co-design of hardware and software, including next-gen data center components from companies such as Attotude and Enfabrica, discrete “sub systems” that offload transport and congestion control from the CPU, and nanosecond-level time synchronization across the data center. Together these allow the network to precisely schedule every single data packet, ensuring a predictable, ultra-low-latency, and near-flawless fabric.
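To illustrate what “perfectly scheduled” can mean in the simplest case, the sketch below builds a classic shift-based all-to-all schedule: in each round every node sends to exactly one peer and receives from exactly one peer, so no receiver is ever contended. This is a textbook pattern chosen for illustration, not the design of any company named above, and it ignores topology, message sizes, and clock skew.

```python
def all_to_all_schedule(num_nodes):
    """Return rounds of (sender, receiver) pairs.

    In round `shift`, node i sends to node (i + shift) % num_nodes, so each
    round is a permutation: one send and one receive per node.
    """
    return [
        [(src, (src + shift) % num_nodes) for src in range(num_nodes)]
        for shift in range(1, num_nodes)
    ]


schedule = all_to_all_schedule(4)
first_round = schedule[0]
```

With four nodes this produces three rounds that together cover every ordered pair of distinct nodes exactly once. A fabric with tight time synchronization could, in principle, execute rounds like these on a fixed timetable instead of reacting to congestion after it occurs.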

IV. Summary

We believe some of the most successful investments will capture Act 1 economics while accumulating Act 2 optionality.

The winners will exhibit a unique combination: Act 1 capital efficiency and Act 2 architectural inevitability.