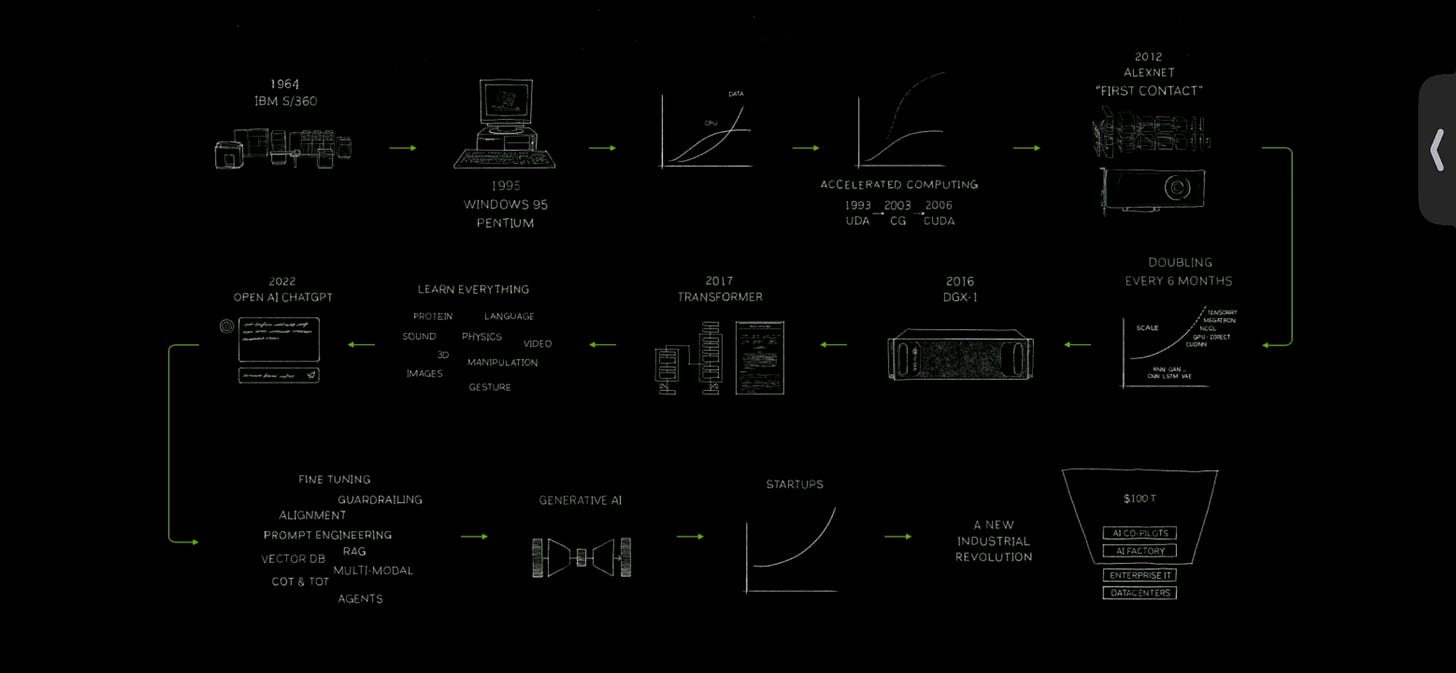

The Major Computing Cycles: from IBM to NVIDIA, CUDA and the Generative AI age

Charting the Evolution of Computing: From Mainframes to AI Revolution and the Rise of Vector Databases

In yesterday's keynote at NVIDIA GTC, Jensen Huang described the major computing cycles of the past, present, and future. It is well worth a listen!

Computing has undergone remarkable transformations over the decades, with significant milestones that have shaped the technological landscape. The timeline in NVIDIA's image traces a journey from IBM's System/360 in 1964 to the AI models of 2022, such as OpenAI's ChatGPT. We discuss each era below.

Mainframe to Personal Computing: The Seeds of Change

The IBM System/360 represented the first major cycle of computing, an era in which computers filled entire rooms and were accessed by a privileged few. This centralization of computing power was the norm for decades. By 1995, with Windows 95 running on Intel's Pentium processors, computing had become personal. PCs democratized access to computing power, fostering a generation of creators, developers, and businesses.

The Acceleration Era: GPUs and Parallel Processing

The rise of graphics processing units (GPUs) marked a pivotal shift in computing capabilities. NVIDIA's CUDA, introduced in 2006, was a groundbreaking innovation that opened GPUs to general-purpose parallel programming: work that splits into many independent calculations can run across many GPU cores at once instead of one step at a time. CUDA, which is a computing platform and programming model rather than a single library, became a landmark for developers in AI. This technology paved the way for accelerated computing, which bolstered advancements in 3D graphics, scientific computation, and, eventually, machine learning, leading to the new wave of AI infrastructure in the data center that we are in today.
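To make the idea of parallel processing concrete, here is a minimal sketch in plain Python that imitates how a CUDA-style kernel divides work: each "thread" computes its own global index and handles exactly one element. It is an illustration only and does not touch a GPU. The helper names `saxpy_thread` and `launch` are hypothetical, and the loops run one after another, whereas real GPU threads run concurrently.

```python
# Pure-Python imitation of a CUDA-style data-parallel launch (no GPU involved).
# On real hardware the threads run concurrently; here they run sequentially.

def saxpy_thread(block_idx, block_dim, thread_idx, a, x, y, out):
    """Work done by a single 'thread': compute one output element."""
    i = block_idx * block_dim + thread_idx  # global index, as in a CUDA kernel
    if i < len(x):  # guard: the last block may contain spare threads
        out[i] = a * x[i] + y[i]

def launch(kernel, n, block_dim, *args):
    """Hypothetical helper: call the kernel once per (block, thread) pair."""
    grid_dim = (n + block_dim - 1) // block_dim  # number of blocks, rounded up
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)

x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [10.0, 20.0, 30.0, 40.0, 50.0]
out = [0.0] * len(x)

# 5 elements with 4 threads per block -> 2 blocks, 3 spare threads do nothing.
launch(saxpy_thread, len(x), 4, 2.0, x, y, out)
print(out)  # [12.0, 24.0, 36.0, 48.0, 60.0]
```

Because no element depends on any other, the order in which the threads run does not matter, which is what lets a GPU execute them all at once.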

AI and Deep Learning: A New Frontier

In 2012, AlexNet's victory in the ImageNet competition, dubbed "First Contact," heralded the deep learning revolution. GPUs became the engine of AI, driving rapid progress in neural network performance. This led to the idea that AI capability doubles every six months, a stark contrast to the more gradual pace of earlier computing advancements.
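A quick back-of-the-envelope calculation shows why a six-month doubling period is such a contrast. The sketch below compounds growth over five years for two doubling periods. The two-year figure is an assumption chosen purely for comparison, not a number from the keynote, and `growth_factor` is a hypothetical helper.

```python
def growth_factor(years, doubling_period_years):
    """Total multiplier after `years` if a quantity doubles every period."""
    return 2 ** (years / doubling_period_years)

# Doubling every six months, as described above: 10 doublings in 5 years.
fast = growth_factor(5, 0.5)

# Assumed slower pace for comparison: doubling every two years (2.5 doublings).
slow = growth_factor(5, 2.0)

print(f"six-month doubling over 5 years: {fast:.0f}x")  # 1024x
print(f"two-year doubling over 5 years:  {slow:.2f}x")  # 5.66x
```

Under these assumptions, the same five years yield roughly a thousandfold improvement in one case and less than sixfold in the other.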

Generative AI: The Birth of Creative Machines

Fast forward to 2017: the introduction of the Transformer architecture, on which models like GPT and BERT were built the following year, marked a new phase in generative AI. These models showcased an AI's ability to learn from vast amounts of data and generate human-like text, opening up possibilities for applications in language translation, content creation, and even code generation. NVIDIA highlights OpenAI's ChatGPT, the first AI application to reach 100M users.

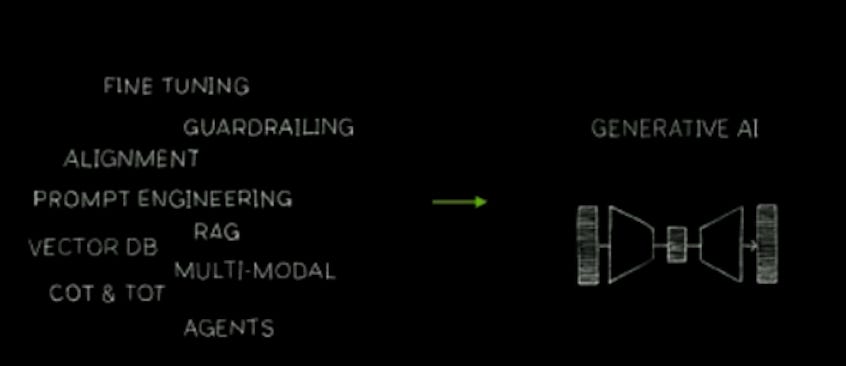

In the realm of Generative AI, a significant development has been the introduction of systems like RAG (Retrieval-Augmented Generation). This hybrid approach marries the best of generation and retrieval systems, leveraging the vast knowledge stored in large databases while utilizing the creative prowess of generative models. RAG systems first retrieve information relevant to a query from a dataset and then use this context to generate a coherent and informed response. Grounding the model's output in retrieved data improves its precision and factual accuracy, which helps mitigate a traditional limitation of purely generative systems: they can fabricate details or miss nuances contained in the actual data.
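The retrieve-then-generate flow can be sketched in a few lines of Python. This is a toy illustration under loud assumptions: retrieval is plain word overlap rather than a real search system, the three documents are stand-ins, and `generate` is a hypothetical placeholder where a call to an actual generative model would go.

```python
import re

# Stand-in knowledge base; a real system would query a much larger dataset.
DOCUMENTS = [
    "CUDA was introduced by NVIDIA in 2006.",
    "AlexNet won the ImageNet competition in 2012.",
    "The IBM System/360 was announced in 1964.",
]

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, k=1):
    """Step 1: rank documents by how many words they share with the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, context):
    """Step 2: put the retrieved passages in front of the question."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"

def generate(prompt):
    """Step 3 (hypothetical placeholder): a real system calls a model here."""
    return f"[model response to a {len(prompt)}-character prompt]"

query = "When was CUDA introduced?"
context = retrieve(query, DOCUMENTS)
prompt = build_prompt(query, context)
answer = generate(prompt)
print(prompt)
```

The key design point is that the model only sees the question together with the retrieved passages, so its answer can be checked against those passages.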

The relevance of RAG systems to the broader concept of "Generative AI" cannot be overstated. In an era where the distinction between real and AI-generated content is increasingly blurred, the capacity for AI to draw from real-world information and generate verifiable outputs is invaluable. For instance, in industries such as journalism, research, and legal analysis, where factual accuracy is paramount, RAG could represent a leap forward.

Vector databases, led by Pinecone, are highlighted as a pillar of AI infrastructure. They manage high-dimensional vector data (also known as embeddings) and are optimized for vector search. These databases are pivotal for tasks like similarity search in machine learning models, including those used in RAG systems, as they enable rapid retrieval of relevant information based on vector similarity. This is crucial when dealing with the high volume and dimensionality of data produced by Generative AI, allowing for quicker, more precise retrieval that can significantly enhance the generation process. The use of vector databases is indicative of the evolving needs of AI systems, pointing to a future where the infrastructure supporting AI must be as dynamic and advanced as the algorithms themselves.
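As a rough illustration of what retrieval based on vector similarity means, the sketch below ranks a handful of stored embeddings by cosine similarity to a query vector. Everything here is an assumption made for demonstration: the three-dimensional vectors are invented, `top_k` is a hypothetical helper, and the search is a brute-force scan. It does not show Pinecone's API, and production vector databases typically rely on specialized indexes rather than comparing against every vector.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query, index, k=2):
    """Brute-force search: score every stored vector, keep the k best keys."""
    scored = [(cosine_similarity(query, vec), key) for key, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [key for _, key in scored[:k]]

# Invented 3-dimensional "embeddings"; real ones have hundreds of dimensions.
index = {
    "gpu": [0.9, 0.1, 0.0],
    "mainframe": [0.1, 0.9, 0.0],
    "chatbot": [0.0, 0.2, 0.9],
}

result = top_k([1.0, 0.0, 0.1], index, k=2)
print(result)  # ['gpu', 'mainframe']
```

In a RAG system, the query vector would come from embedding the user's question, and the returned keys would point to the passages handed to the generative model.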

2022 and Beyond: The Era of Large Language Models

In 2022, OpenAI's ChatGPT took the world by storm, signifying a maturation in AI capabilities. The sophistication of fine-tuning, alignment, and prompt engineering allows these models not just to understand and generate text, but to do so in a way that can be directed and controlled with nuanced instructions. Multi-modal capabilities, where AI can interpret and generate sound, images, and language, are becoming the norm.

The Industrialization of AI

As we look at the broader implications, it's clear that we're entering what can be described as a new industrial revolution powered by AI. The image suggests a staggering potential market size of $100 trillion, with AI at the helm of future enterprises, acting as co-pilots in businesses, fueling an AI factory model for software development, and revolutionizing enterprise IT and data centers.