From Niche to Necessary: Credo’s Quiet Domination of AI Interconnects

How Credo Semiconductor became a $12B critical link in the AI data center

The AI data center is being re-architected in real time. We’ve already covered Astera Labs’ IPO and highlighted other key beneficiaries of the AI compute boom—Broadcom’s custom ASICs and networking giants like Mellanox and Arista Networks. Today, we’re turning our attention to another once-niche vendor that’s quickly becoming a critical pillar of the AI-first data center: Credo Semiconductor.

At the center of this transformation is bandwidth. Training LLMs and serving real-time inference workloads at scale requires unprecedented interconnect density and performance between GPUs, switches, CPUs, and storage systems. Each card in an NVIDIA H100 server can draw around 700W, and the server as a whole demands 3.2Tbps+ of bi-directional communication. That’s not just a compute challenge; it’s a connectivity one. How do you move data fast enough to feed AI models the information they need?

Credo Semiconductor has quietly emerged as one of the most essential—but underappreciated—pieces of this new AI infrastructure stack. Unlike companies building the GPUs or LLMs, Credo is building the lanes the AI traffic rides on.

Credo’s Role in the AI Data Center Stack

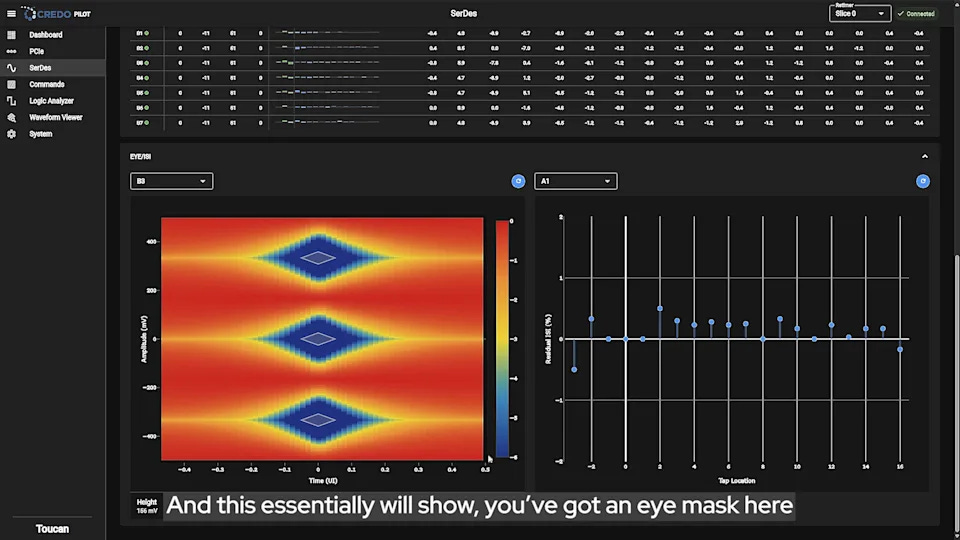

1. High-Speed SerDes and Retimers

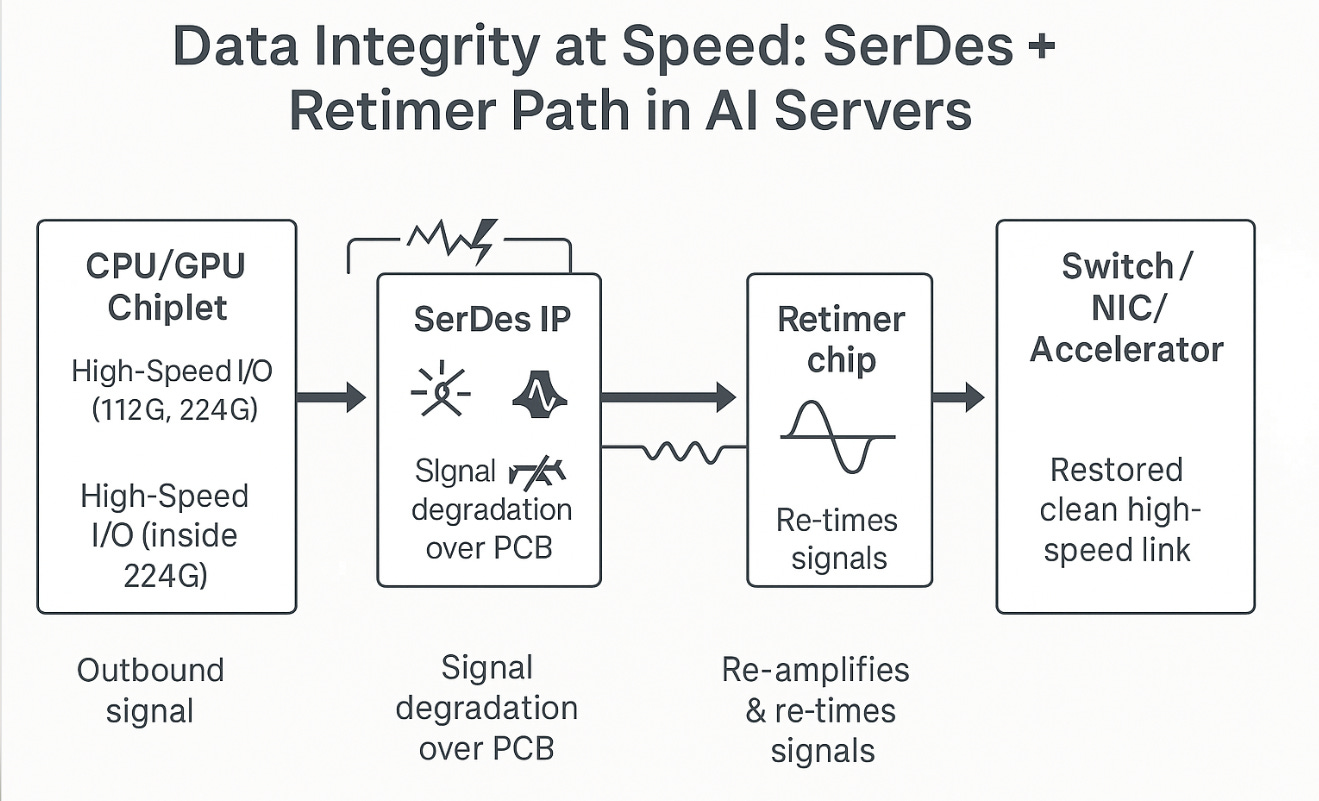

At the heart of modern AI servers is the need to move enormous volumes of data between GPUs, CPUs, and memory with minimal latency and signal loss. Credo’s SerDes IP (Serializer/Deserializer intellectual property) and retimer chips enable this by supporting PCIe 5.0, 6.0, and emerging PCIe 7.0 standards, as well as CXL (Compute Express Link), all essential for high-throughput, low-latency connections inside GPU servers. PCIe 6.0, for instance, runs at 64 GT/s per lane, meaning a 16-lane (x16) link can deliver up to 128 GB/s in each direction, or 256 GB/s of raw bi-directional bandwidth. But signal integrity degrades over long traces and dense boards, especially at 112G and 224G per-lane rates. That’s where Credo’s retimers come in: they recover the incoming data and retransmit a clean, re-timed signal, restoring data integrity between chips, boards, and system components.
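The x16 bandwidth figure follows from simple arithmetic, sketched here in Python. This is a back-of-the-envelope calculation of raw signaling rate only: it assumes one bit per transfer per lane and ignores encoding, FLIT, and protocol overhead, so usable throughput is somewhat lower. The function name and the rate table are illustrative and do not come from any Credo or PCI-SIG tooling.

```python
# Raw per-lane signaling rates in GT/s for the PCIe generations named above.
PCIE_GT_PER_S = {"5.0": 32, "6.0": 64, "7.0": 128}


def pcie_raw_bandwidth_gb_per_s(gt_per_s: float, lanes: int) -> tuple:
    """Return (per-direction, bi-directional) raw bandwidth in GB/s.

    Assumption: each transfer carries one bit per lane, and no encoding
    or protocol overhead is subtracted.
    """
    per_direction = gt_per_s * lanes / 8  # 8 bits per byte
    return per_direction, 2 * per_direction


for gen, rate in PCIE_GT_PER_S.items():
    one_way, both_ways = pcie_raw_bandwidth_gb_per_s(rate, lanes=16)
    print(f"PCIe {gen} x16: {one_way:.0f} GB/s per direction, "
          f"{both_ways:.0f} GB/s bi-directional")
# The PCIe 6.0 line prints: 128 GB/s per direction, 256 GB/s bi-directional
```

Each generation doubles the per-lane rate, which is why the signal-integrity problem gets harder with every step.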

This is foundational in GPU-rich architectures like NVIDIA NVLink, Broadcom Tomahawk switching fabrics, or AMD MI300-based systems. Without high-performance SerDes and retimers, these clusters don’t scale.

2. AECs as Optical Alternatives

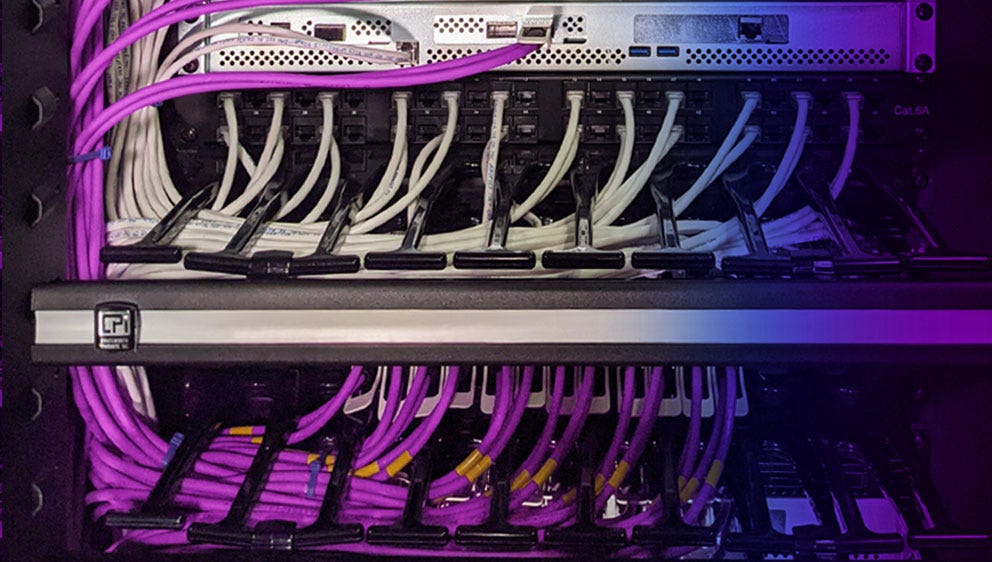

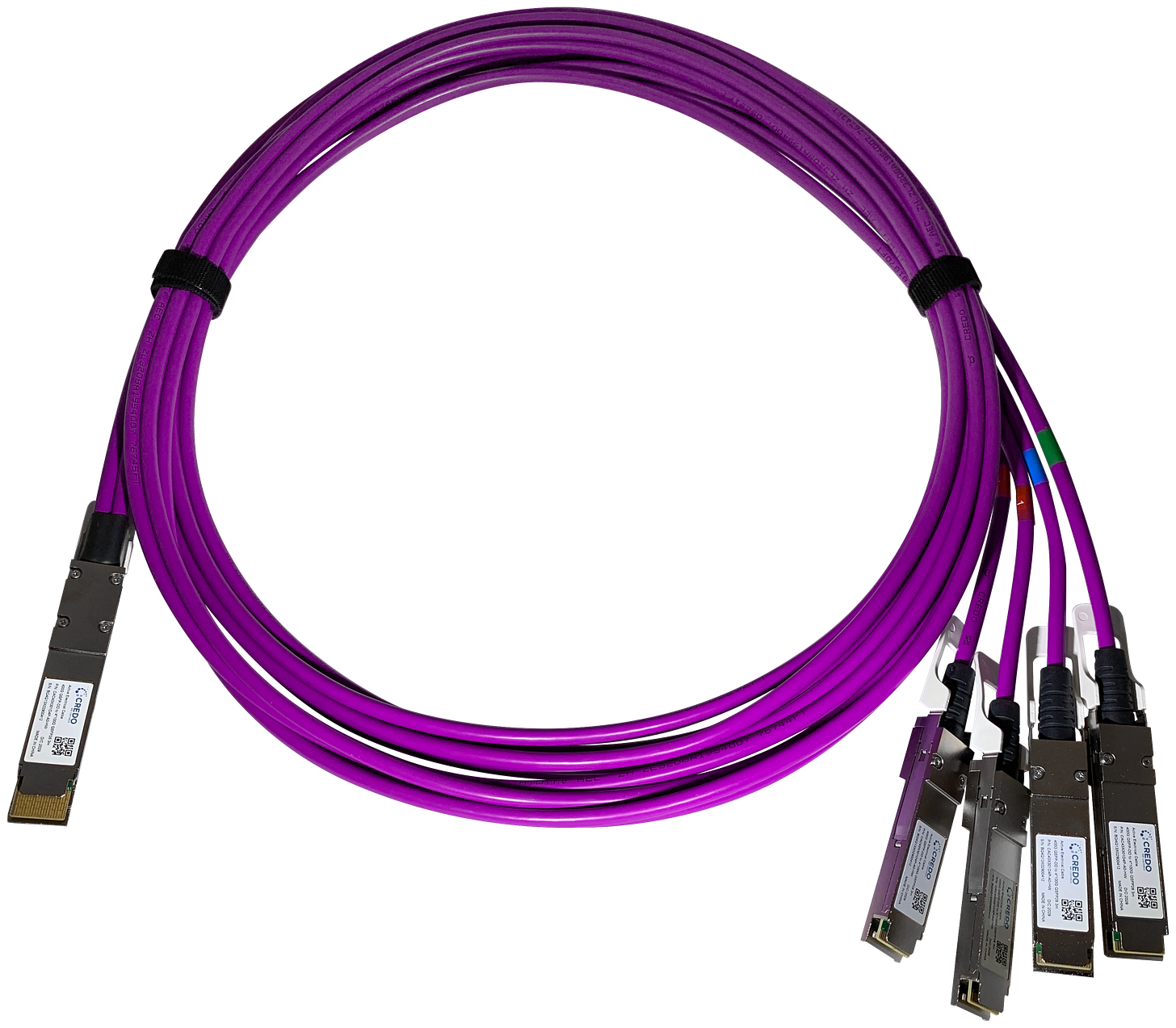

While optical transceivers dominate at 500m+, Active Electrical Cables (AECs) are emerging as a cost-effective, power-efficient interconnect for short-reach (<5m) links. Credo says its ZeroFlap™ AECs offer >100× reliability improvements over active optical cables (AOCs), and they have quickly become the go-to solution for top-of-rack to server interconnect in AI clusters.

As hyperscalers shift toward co-packaged optics and tighter thermal envelopes, AECs offer critical advantages in density, cost, and thermals. Credo is now considered a category-defining vendor in this segment.

3. PILOT: Software as a Differentiator

Credo’s PILOT platform, launched in 2024, adds observability and diagnostics to AEC and DSP links—making its products more “software-defined.” This aligns with the broader trend in infrastructure where hardware alone isn’t sticky enough. PILOT gives hyperscalers deeper telemetry and reliability—essential for maintaining massive AI fabrics.

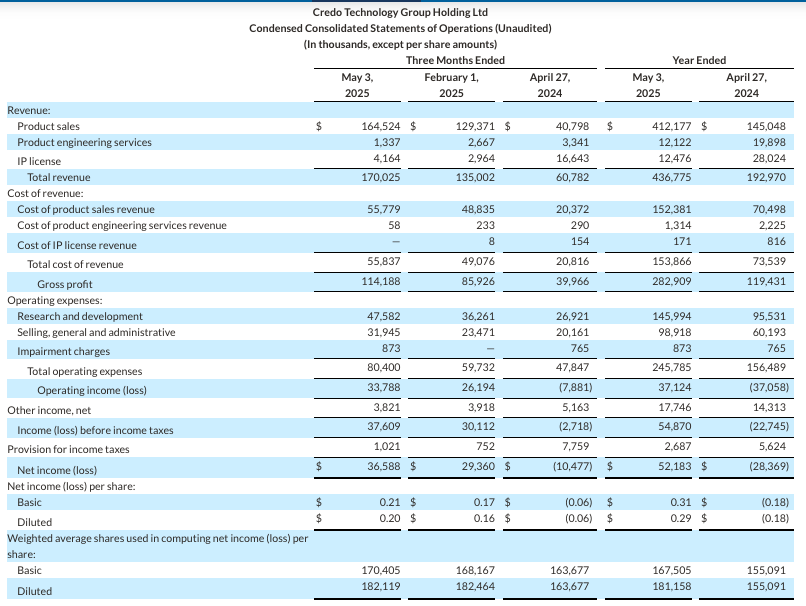

Financial Performance: AI Product-Led Acceleration

Annualized revenue: $680M, up 26% quarter-over-quarter and 180% year-over-year

Gross margin: 67%

Annualized net income: $148M

Market cap: $12.4B, up 148% year-over-year

The growth is almost entirely product-driven, particularly from AECs and SerDes retimers, which hyperscalers are deploying at massive scale. Licensing (historically ~15% of revenue) has become less relevant as product shipments dominate sales.
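To put the headline figures in context, here is a rough sketch of what they imply. It assumes "annualized" means the latest quarter multiplied by four, which the figures above do not state explicitly. The variable and function names are illustrative, and the outputs are derived estimates rather than reported results.

```python
def implied_metrics(annualized_revenue_m, qoq_growth, yoy_growth,
                    annualized_net_income_m, market_cap_m):
    """Derive rough quarterly and valuation figures (all in $M).

    Assumption: annualized = latest quarter x 4.
    """
    latest_quarter = annualized_revenue_m / 4
    return {
        "latest_quarter_m": latest_quarter,
        "prior_quarter_m": latest_quarter / (1 + qoq_growth),
        "year_ago_quarter_m": latest_quarter / (1 + yoy_growth),
        "net_margin": annualized_net_income_m / annualized_revenue_m,
        "price_to_sales": market_cap_m / annualized_revenue_m,
    }


m = implied_metrics(
    annualized_revenue_m=680.0,     # $680M annualized revenue
    qoq_growth=0.26,                # up 26% quarter-over-quarter
    yoy_growth=1.80,                # up 180% year-over-year
    annualized_net_income_m=148.0,  # $148M annualized net income
    market_cap_m=12_400.0,          # $12.4B market cap
)
print(f"Latest quarter:   ${m['latest_quarter_m']:.1f}M")    # $170.0M
print(f"Prior quarter:    ${m['prior_quarter_m']:.1f}M")     # $134.9M
print(f"Year-ago quarter: ${m['year_ago_quarter_m']:.1f}M")  # $60.7M
print(f"Net margin:       {m['net_margin']:.1%}")            # 21.8%
print(f"Price-to-sales:   {m['price_to_sales']:.1f}x")       # 18.2x
```

Under that assumption, quarterly revenue has nearly tripled in a year, and the market is paying roughly 18 times annualized sales.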

Strategic Positioning and Conclusion

Credo Semiconductor exemplifies the second-order beneficiaries of the AI boom: companies that are not training models or building LLMs, but building the infrastructure those models need to scale. Its mix of differentiated silicon, system-level innovation, and increasing software control gives it a long runway in hyperscale AI infrastructure.

Category Creator in AECs: Credo leads a fast-growing segment with IP and manufacturing advantages.

Vertical Integration Without Capex: Uses a fabless model but maintains control over ASIC and packaging design, balancing gross margin and capital efficiency.

Embedded in Hyperscaler Pipelines: With adoption across AWS, Microsoft, and now reportedly xAI, Credo is becoming core to 800G+ and 1.6T data center designs.

Long-Term IP Flywheel: As PCIe 7.0 and CXL 3.0 adoption grows, Credo’s IP licensing business may see a second wind—especially among custom silicon teams at hyperscalers.

For VCs, Credo is the kind of company that would be nearly impossible to back at seed today—but serves as a reminder: the AI stack is broad, and the infrastructure tail is long. Tomorrow’s Credos will emerge from similarly narrow technical wedges—SerDes, interconnects, thermal systems, memory fabrics—and quietly compound into critical AI infrastructure leaders.