The 5 Raw Inputs Powering the AI Data Center Boom

How real estate, capital, compute, energy, and networking are the defining infrastructure inputs for the AI data center construction boom

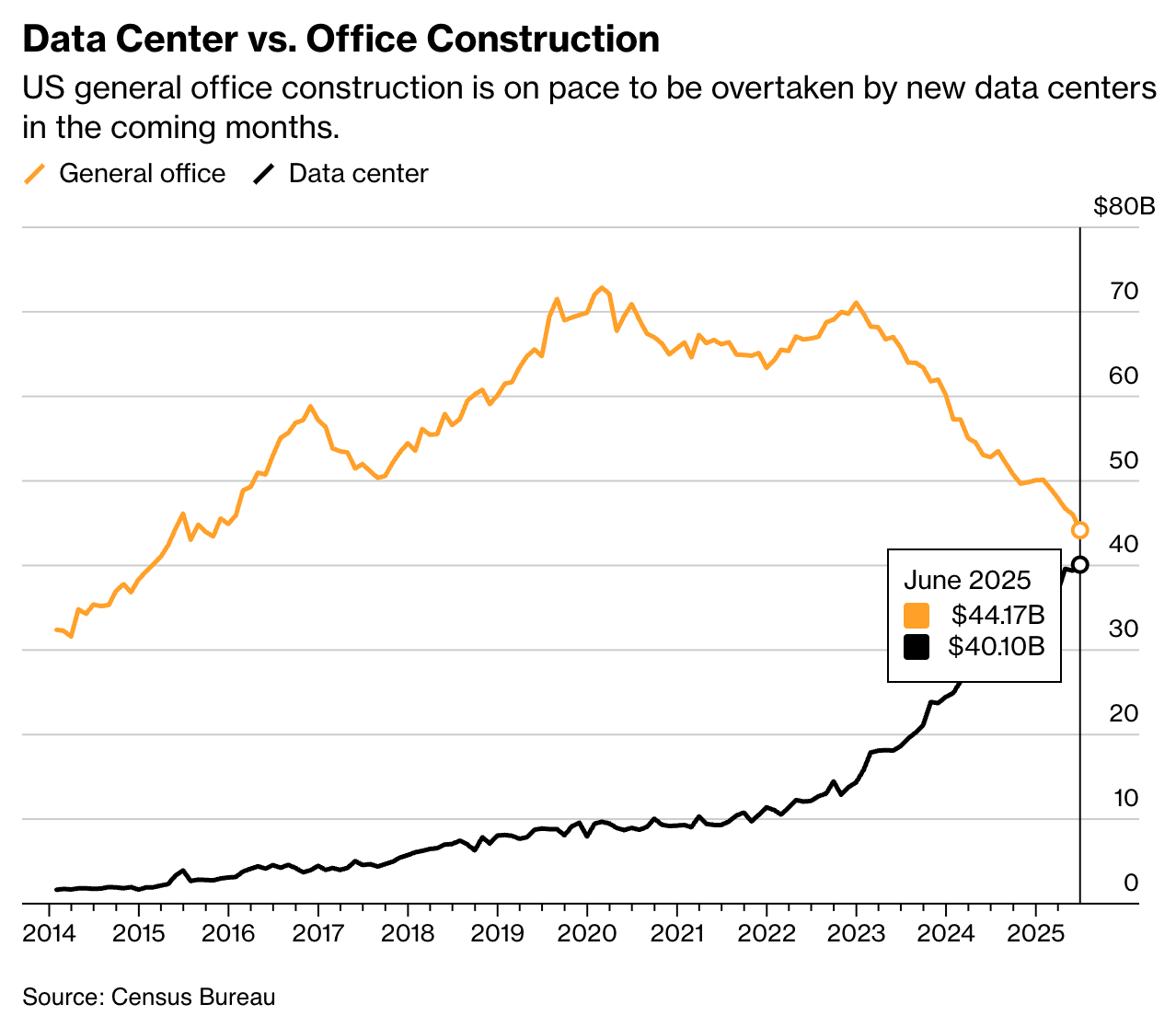

Source: Bloomberg and Census Bureau [link]

Intro: Data Centers Surpass Office Construction in 2025

This year, annual spending on new data centers is set to overtake general office construction. Ten years ago, US data center construction was a paltry $2B, compared with over $33B of office construction spend. After a decade of consistent growth up until COVID, office development has declined sharply from its 2020 peak, while data center investment has surged, driven by the AI arms race. This divergence underscores a profound shift in commercial real estate priorities: the physical backbone of the digital economy is now drawing more construction spending than the spaces where people work in person. AI’s insatiable appetite for compute, storage, and low-latency connectivity is reshaping the built environment at unprecedented speed.

1. Real Estate

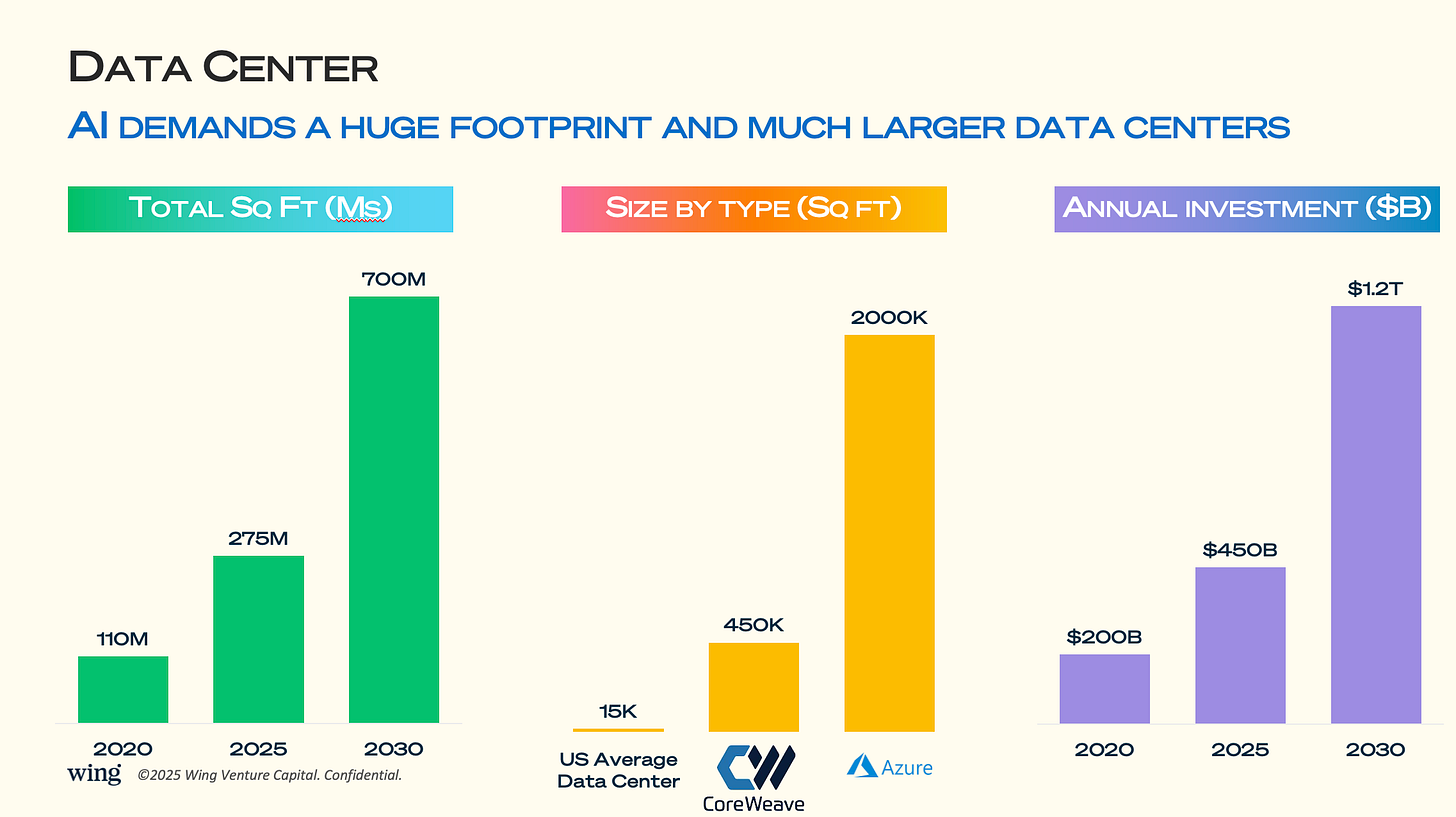

The first key input to the AI data center build-out is real estate. The footprint of AI-driven data centers is projected to scale dramatically, from 275M sq ft in 2025 to 700M sq ft by 2030. An AI data center’s footprint also looks more like that of a crypto mining facility than that of a traditional data center.
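To put the footprint projection in annual terms, here is a minimal sketch that converts the two endpoints quoted above into an implied compound annual growth rate (CAGR). It assumes smooth compounding between 2025 and 2030, which the source figures do not claim; the function and variable names are illustrative.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over `years` years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Endpoints quoted in the text (square feet).
footprint_2025 = 275e6
footprint_2030 = 700e6

# Assumption: growth compounds evenly over the five-year span.
rate = cagr(footprint_2025, footprint_2030, 2030 - 2025)
print(f"Implied footprint growth: {rate:.1%} per year")
```

Under that assumption, the footprint would need to grow by roughly 20% every year to reach the 2030 figure.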

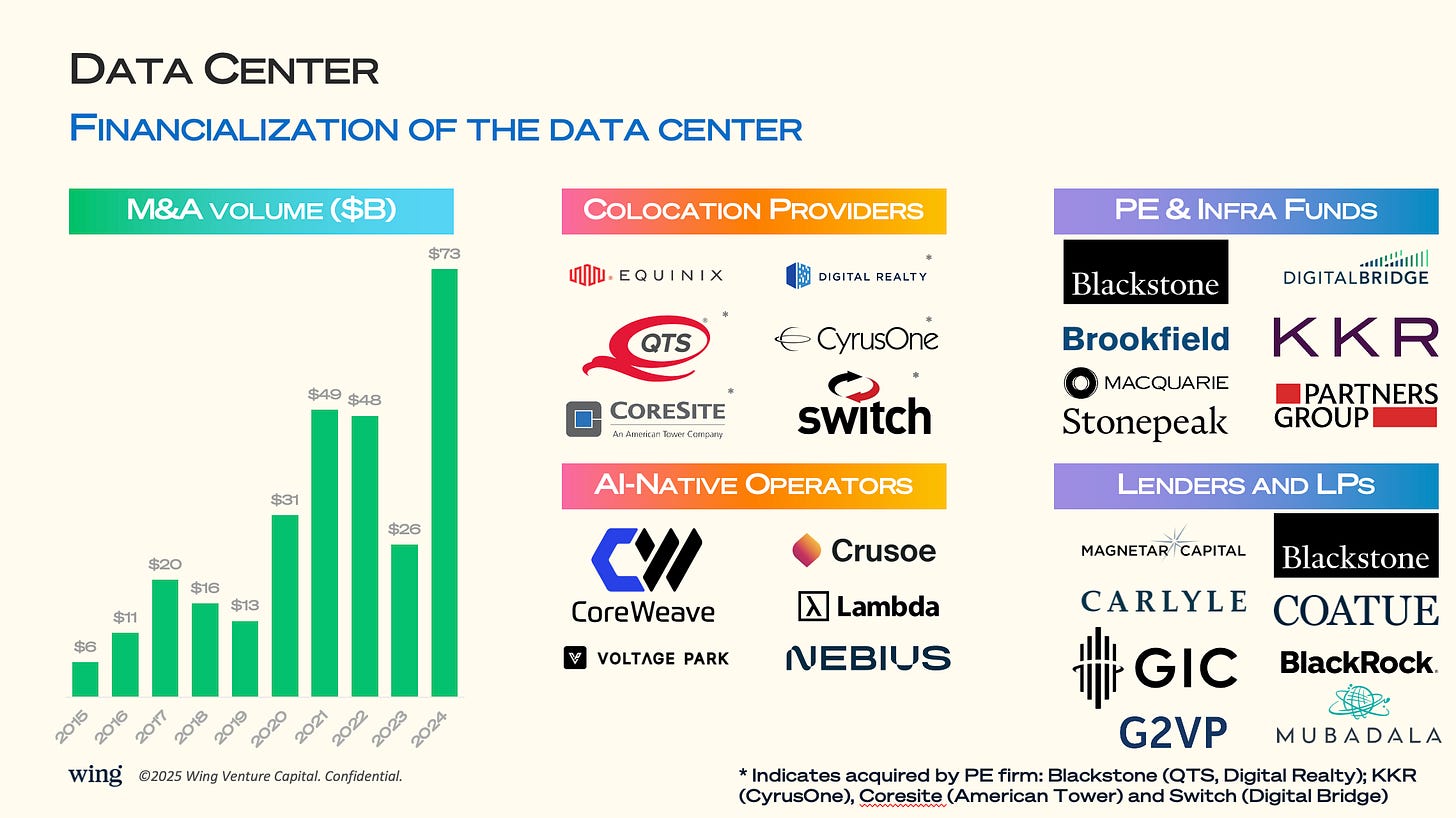

The real estate profile of AI data centers is radically different from that of the traditional data center, a model defined by Equinix and sited around population density. AI data centers optimize for cheap land and cheap electricity, while traditional data centers optimized for bandwidth and proximity to population centers. Facilities are also getting physically larger, with hyperscale operators like Azure building campuses exceeding 2M sq ft, over 100× the size of a typical U.S. data center. CoreWeave’s 450K sq ft facilities illustrate the new “AI-native” build standard, optimized for high-density racks and liquid cooling. This expansion will demand favorable zoning, proximity to high-capacity fiber routes, and access to reliable power sources. The result: data center real estate is becoming one of the most competitive and strategic asset classes globally, and it now represents more than 30% of Blackstone’s flagship fund. We wrote about this trend in 2023, and the financialization of the data center has only become stronger since.

Notable facts:

Blackstone now owns more data center square footage than some of the largest REITs, following its acquisition of QTS and its stakes in Digital Realty.

DigitalBridge reports that AI tenant deals are often signed with prepayment or multi-year upfront commitments—rare in traditional colocation.

2. Capital

Data center development has become deeply financialized, with M&A volume hitting $73B in 2024. Private equity and infrastructure funds like Blackstone, KKR, and DigitalBridge are aggressively consolidating colocation providers, betting on multi-decade AI infrastructure demand. AI-native operators—CoreWeave, Crusoe, Lambda—are attracting both equity and debt financing to expand capacity quickly. Capital intensity is enormous: annual investment is projected to grow from $450B in 2025 to $1.2T by 2030. For investors, the blend of long-term leasing, mission-critical status, and AI-driven growth makes data centers a premier infrastructure play.

Notable facts:

Private equity buyers are paying EV/EBITDA multiples in the mid-teens for hyperscale-ready assets, a premium over historical averages.

AI-native operators like CoreWeave have secured multi-billion dollar credit facilities backed by GPU collateral, effectively making compute a financeable asset class.

3. Compute

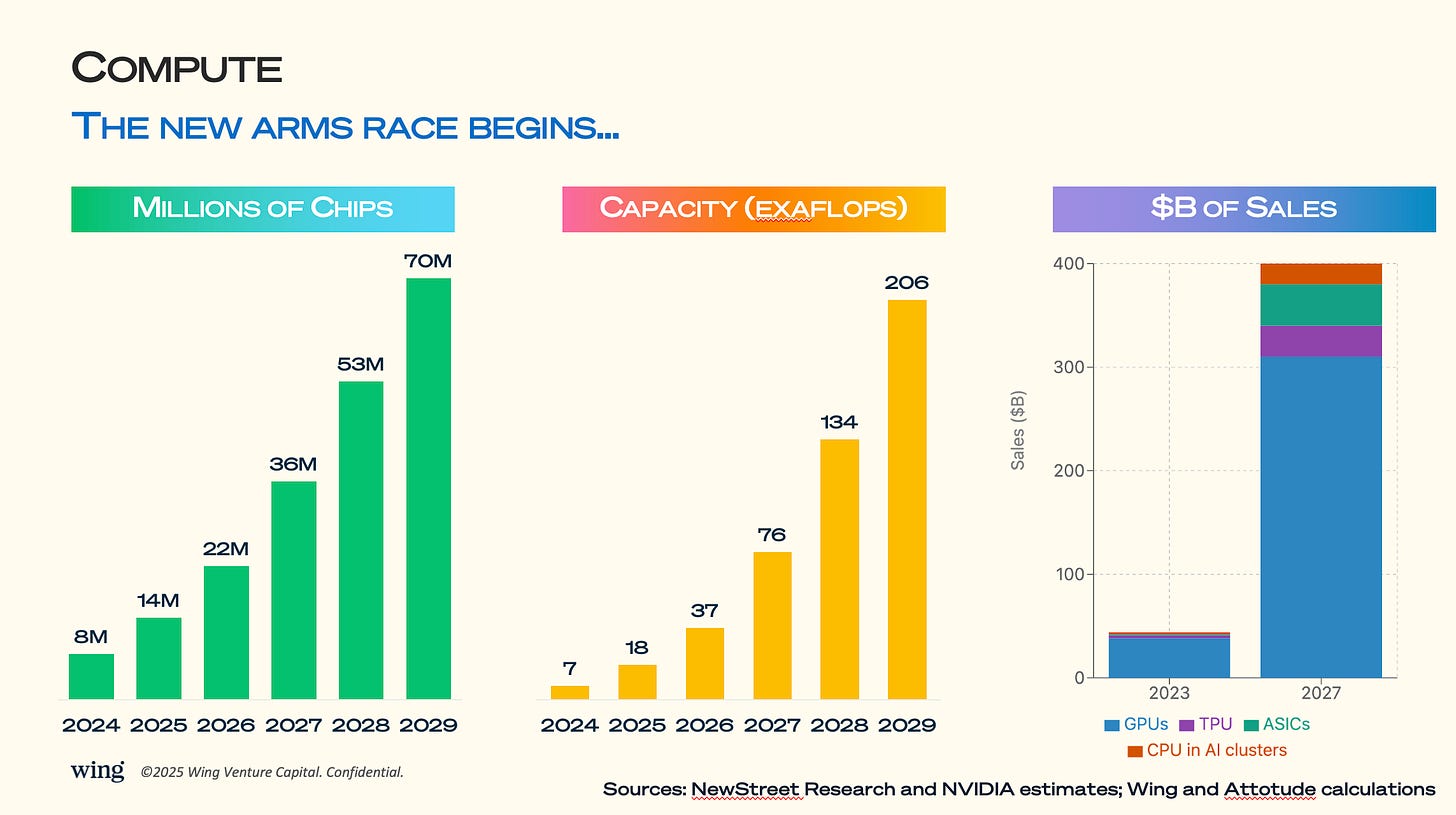

The compute layer is entering an unprecedented growth phase, with chip shipments set to grow from 14M in 2025 to 70M by 2029. Only capital-rich companies have the compute needed to compete in AI, creating a divide between GPU-rich and GPU-poor companies. Aggregate AI compute capacity is projected to surpass 200 exaflops by the end of the decade. Nvidia dominates the current revenue pool, with GPUs representing the bulk of a projected $400B in AI chip sales by 2027, while TPUs, custom ASICs (e.g., from cloud providers), and AI-optimized XPUs (e.g., from Broadcom) also gain share. This growth is driving massive supply chain and manufacturing investments, from advanced packaging to high-bandwidth memory. In the AI era, data center competitiveness is increasingly a function of compute density per megawatt.

Notable facts:

Nvidia holds ~80% market share in data center GPUs, with gross margins exceeding 75%.

HBM (High Bandwidth Memory) production is a key supply constraint—Samsung, SK Hynix, and Micron are all racing to expand capacity, with HBM prices up >30% YoY.

By 2027, an AI training cluster capable of 1 exaflop may cost $500–$800M in hardware alone, not including networking or facilities.

Arm-based CPUs are quietly gaining share in inference-heavy AI clusters due to lower power draw per token processed.

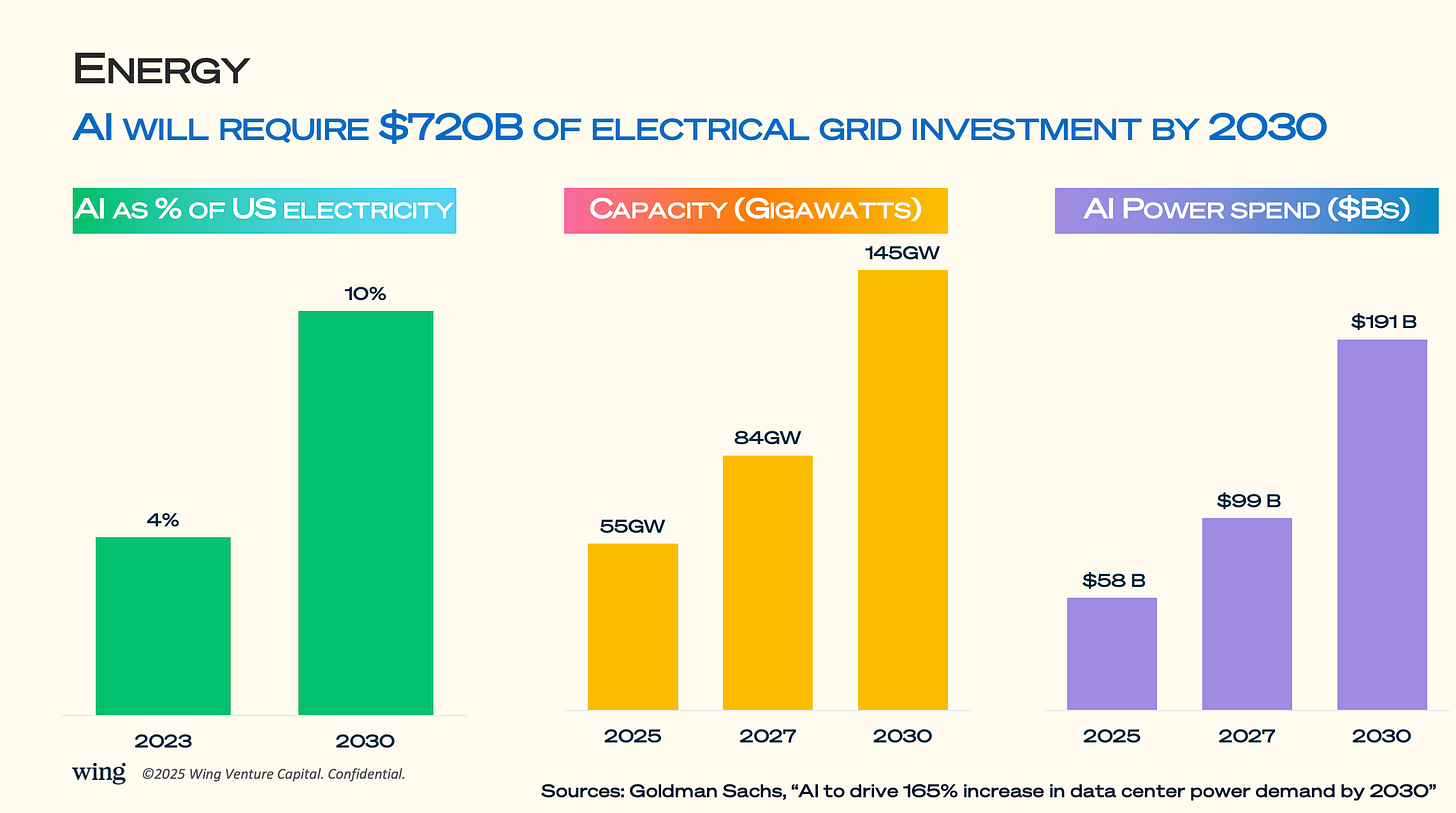

4. Energy

AI’s energy needs are accelerating at a scale that will reshape national grids. By 2030, AI workloads could consume 10% of total U.S. electricity, up from just 4% in 2023. Meeting this demand requires adding 90GW of capacity between 2025 and 2030, equivalent to building dozens of new nuclear plants or thousands of large-scale solar farms. AI power spending will more than triple, from $58B in 2025 to $191B by decade’s end. This dynamic makes energy sourcing and sustainability not just ESG talking points, but competitive differentiators in AI infrastructure.

Notable facts:

The AI power ramp, an extra 90GW by 2030, is roughly equal to adding the total current generation capacity of the United Kingdom.

Data center PUE (Power Usage Effectiveness) averages ~1.2 for hyperscale, but AI training loads can push it higher due to extreme cooling needs.

Some operators are exploring direct nuclear supply—Microsoft has hired nuclear engineers and filed for SMR (Small Modular Reactor) licenses.

Curtailment agreements with renewable providers are being renegotiated to prioritize AI data center loads over less profitable grid exports.
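PUE is defined as total facility power divided by the power drawn by IT equipment, so a small change in PUE translates directly into extra megawatts that must be sourced. The sketch below works through that arithmetic. The 100 MW IT load and the 1.4 comparison value are hypothetical inputs chosen for illustration, not figures from this article; only the ~1.2 hyperscale average comes from the text.

```python
def facility_power_mw(it_load_mw, pue):
    """Total facility power implied by an IT load and a PUE value."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0 by definition")
    return it_load_mw * pue


def overhead_power_mw(it_load_mw, pue):
    """Power spent on cooling, power conversion, and other non-IT overhead."""
    return facility_power_mw(it_load_mw, pue) - it_load_mw


# Hypothetical campus: 100 MW of IT (GPU, CPU, storage, network) load.
it_load = 100.0

# 1.2 is the hyperscale average cited above; 1.4 is an assumed
# "cooling-heavy" case used only for comparison.
for pue in (1.2, 1.4):
    total = facility_power_mw(it_load, pue)
    overhead = overhead_power_mw(it_load, pue)
    print(f"PUE {pue}: {total:.0f} MW total, {overhead:.0f} MW overhead")
```

In this illustration, moving from a PUE of 1.2 to 1.4 doubles the non-IT overhead from about 20 MW to about 40 MW for the same compute.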

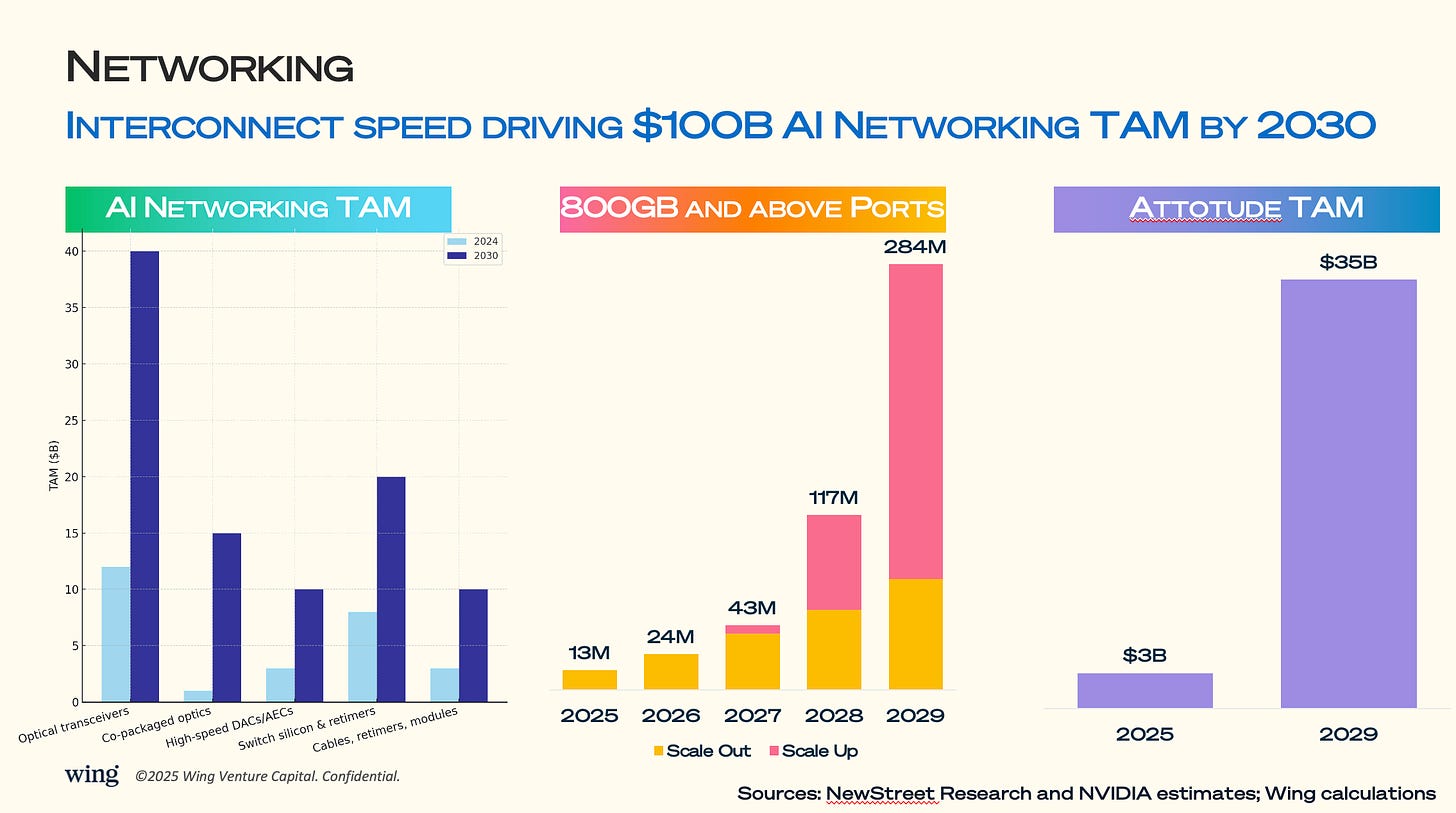

5. Networking

High-performance networking is the circulatory system of AI data centers, with the total AI networking TAM projected to hit $100B by 2030. Leading players include Arista, the independent pure play, and Nvidia, which has quietly grown its networking business to >$10B of sales after its acquisition of Mellanox. Optical transceivers alone will grow from a ~$12B market in 2024 to $40B by 2030, driven by the shift to interconnects of 800Gb and above. The volume of 800Gb ports is expected to surge from 13M in 2025 to 284M by 2029, enabling both scale-out and scale-up AI clusters. Attotude’s low-latency interconnects, a $35B market by 2029, will be critical for tightly coupled GPU workloads. As models scale, the bottleneck shifts from compute to moving data fast enough between chips, nodes, and sites.
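The transceiver and port projections above cover different time spans, which makes them hard to compare at a glance. As a rough sketch, the snippet below annualizes both, assuming smooth compounding between the quoted endpoints (an assumption of this example, not of the underlying forecasts). The helper name and dictionary layout are illustrative.

```python
def annual_growth(start_value, end_value, start_year, end_year):
    """Implied compound annual growth rate between two dated values."""
    years = end_year - start_year
    if start_value <= 0 or years <= 0:
        raise ValueError("need a positive start value and a positive span")
    return (end_value / start_value) ** (1 / years) - 1


# Endpoints quoted in the text: (start value, end value, start year, end year).
projections = {
    "Optical transceiver market ($B)": (12, 40, 2024, 2030),
    "800Gb ports shipped (M)": (13, 284, 2025, 2029),
}

growth = {name: annual_growth(*args) for name, args in projections.items()}

for name, rate in growth.items():
    print(f"{name}: {rate:.0%} per year")
```

On these assumptions the transceiver market compounds at roughly 22% per year, while 800Gb port volume more than doubles annually, which suggests port counts are expected to grow far faster than transceiver dollars.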

Notable facts:

The leap from 400Gb to 800Gb optical links can reduce model training time by double-digit percentages in tightly coupled GPU clusters.

Co-packaged optics, still <5% of the market today, could surpass $15B TAM by 2030 as switch ASIC power budgets become limiting factors.

The InfiniBand vs. Ethernet battle is intensifying: Nvidia’s Quantum-2 InfiniBand dominates training clusters, but cloud providers are pushing Ethernet-based RDMA to cut costs.

Retimer and cable costs can account for >20% of the total networking bill in AI facilities, an often-overlooked capex driver. See our Astera Labs S-1 review.